Meta has released a Depth API for Quest 3 as an experimental feature that lets developers test dynamic occlusion in mixed reality.

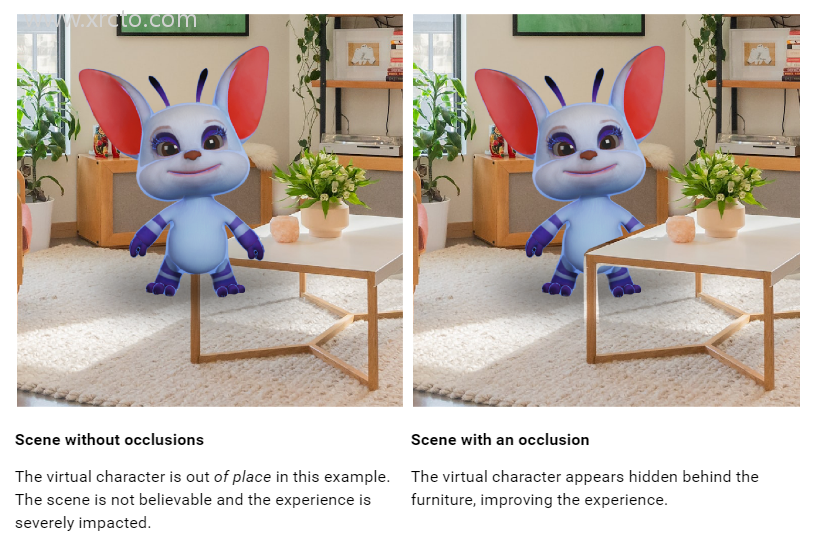

In our review of the Quest 3, we heavily criticized the lack of dynamic occlusion in mixed reality. Virtual objects can appear behind the rough scene mesh generated by the room setup scan, but they always render in front of moving objects, even when the virtual object is farther away, which looks abrupt and unnatural.

Developers can already implement dynamic occlusion for hands by using the hand tracking mesh, but few do because the mesh ends at the wrist, leaving a noticeable cutoff and excluding the rest of the arm.

The new Depth API gives developers a coarse per-frame depth map generated by the headset from its own point of view. This can be used to implement occlusion, both for moving objects such as people and for static objects whose finer details the coarse scene mesh does not capture.

Dynamic occlusion should make mixed reality on the Quest 3 look more realistic. However, the headset's depth sensing resolution is low, so it can't pick up details such as the spaces between your fingers, and you'll see a gap around the edges of objects such as furniture.

Depth maps are also only recommended for use within 4 meters, after which "accuracy will decrease significantly," so developers may also want to use scene meshes to achieve static occlusion.
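One way to combine the two sources can be sketched in Python: per pixel, trust the depth map inside the recommended 4 meter range and fall back to the scene mesh beyond it. This is only an illustration of the idea. The function names, the `None` convention for a missing sample, and the assumption that a per-pixel scene mesh depth is available are all hypothetical and not part of Meta's API.

```python
MAX_RELIABLE_DEPTH_M = 4.0  # range recommended for the depth map, per the article


def occluder_depth(sensor_depth_m, mesh_depth_m):
    """Pick the real-world depth to test a virtual pixel against.

    sensor_depth_m: depth map sample in meters, or None if unavailable.
    mesh_depth_m:   scene mesh depth in meters, or None if no mesh covers the pixel.
    Returns a depth in meters, or None when there is nothing to occlude with.
    """
    if sensor_depth_m is not None and sensor_depth_m <= MAX_RELIABLE_DEPTH_M:
        return sensor_depth_m
    # Beyond the reliable range (or with no sample), use the static mesh if any.
    return mesh_depth_m


def is_occluded(virtual_depth_m, real_depth_m):
    """A virtual pixel is hidden when a real surface is closer to the viewer."""
    return real_depth_m is not None and real_depth_m < virtual_depth_m


# A person standing 1.5 m away hides a virtual object placed at 2 m.
print(is_occluded(2.0, occluder_depth(1.5, 3.0)))
# At 6 m the sensor reading is ignored and the mesh at 5.5 m is used instead.
print(occluder_depth(6.0, 5.5))
```

In a real app this decision would run in a shader rather than on the CPU, and the cutoff could be blended instead of switched abruptly.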

Developers can implement occlusion in two ways: hard occlusion and soft occlusion. Hard occlusion is slightly easier to implement but produces jagged edges, while soft occlusion is harder to implement and has a GPU cost but looks better. Looking at Meta's example video, it's hard to imagine any developer choosing hard occlusion.
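The difference between the two can be illustrated with a simplified per-pixel sketch in Python. It is not Meta's shader code: the function names and the `fade_m` parameter are hypothetical, and a production soft occlusion shader may use a different technique, such as sampling several neighboring depth texels. Here, hard occlusion is a binary depth test, and soft occlusion replaces the step with a linear fade across a small depth band.

```python
def hard_visibility(virtual_depth_m, real_depth_m):
    """Binary test: 1.0 if the virtual pixel is in front of the real surface."""
    return 1.0 if virtual_depth_m <= real_depth_m else 0.0


def soft_visibility(virtual_depth_m, real_depth_m, fade_m=0.05):
    """Visibility in [0, 1] that fades linearly over a band of fade_m meters.

    The band is centered on the point where both depths are equal, so a pixel
    exactly at the real surface gets a visibility of 0.5.
    """
    signed_gap = real_depth_m - virtual_depth_m  # positive: real surface is behind
    t = (signed_gap + fade_m / 2.0) / fade_m
    return max(0.0, min(1.0, t))


# A virtual pixel at 1.00 m tested against real surfaces at increasing distance.
for real in (0.90, 0.99, 1.00, 1.01, 1.10):
    print(real, hard_visibility(1.0, real), round(soft_visibility(1.0, real), 2))
```

The hard test flips straight from hidden to visible, which is where the jagged edges come from on a low resolution depth map. The soft version passes through intermediate values, at the price of extra work per pixel.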

In either case, occlusion has to be implemented explicitly, either by using Meta's occlusion shaders or by adding it to your own custom shaders. This is far from a one-click solution, and supporting the feature can take considerable effort.

In addition to occlusion, developers can use the depth API to implement depth-based visual effects such as fog in mixed reality.
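As a rough illustration of such an effect, the standard exponential fog formula can be driven by real-world depth. The Python sketch below is an assumption about how an app might do this, not Meta's implementation: the function names and the `density` value are hypothetical, and a real app would compute this in a shader and composite the fog over passthrough.

```python
import math


def fog_alpha(real_depth_m, density=0.35):
    """Fog opacity in [0, 1) from real-world depth, using exponential falloff."""
    return 1.0 - math.exp(-density * max(0.0, real_depth_m))


def blend_fog(color_rgb, fog_rgb, real_depth_m, density=0.35):
    """Mix a pixel color toward the fog color according to its depth."""
    a = fog_alpha(real_depth_m, density)
    return tuple((1.0 - a) * c + a * f for c, f in zip(color_rgb, fog_rgb))


# Nearby surfaces stay mostly clear, distant ones fade toward the fog color.
for depth in (0.5, 2.0, 4.0):
    print(depth, round(fog_alpha(depth), 3))
```

Because the depth map is only recommended within 4 meters, an effect like this would likely need to clamp or fade out its input beyond that range.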

Currently, using the Depth API requires enabling experimental features on the Quest 3 headset by running the following ADB command:

`adb shell setprop debug.oculus.experimentalEnabled 1`

Unity developers must also use an experimental version of Unity's XR Oculus package and must be on Unity 2022.3.1 or higher.

You can find the Unity documentation here, and the Unreal documentation here.

Because it is an experimental feature, apps built with the Depth API cannot yet be uploaded to the Quest Store or App Lab, so for now developers must use other distribution methods such as SideQuest to share their implementations. Meta typically promotes experimental features to production features in subsequent SDK releases.