Apple patent provides solution for asymmetric environment presentation between AR/VR and mobile phones


Asymmetric presentation of an environment

(XR Navigation Network November 17, 2023) XR sessions can be accessed across a range of devices. For example, a device such as a headset can show an XR view of a session, while other devices such as a smartphone can show only a non-XR view. Apple calls this mix of XR and non-XR views "asymmetric presentation of an environment" and proposes a corresponding presentation method in a patent application.

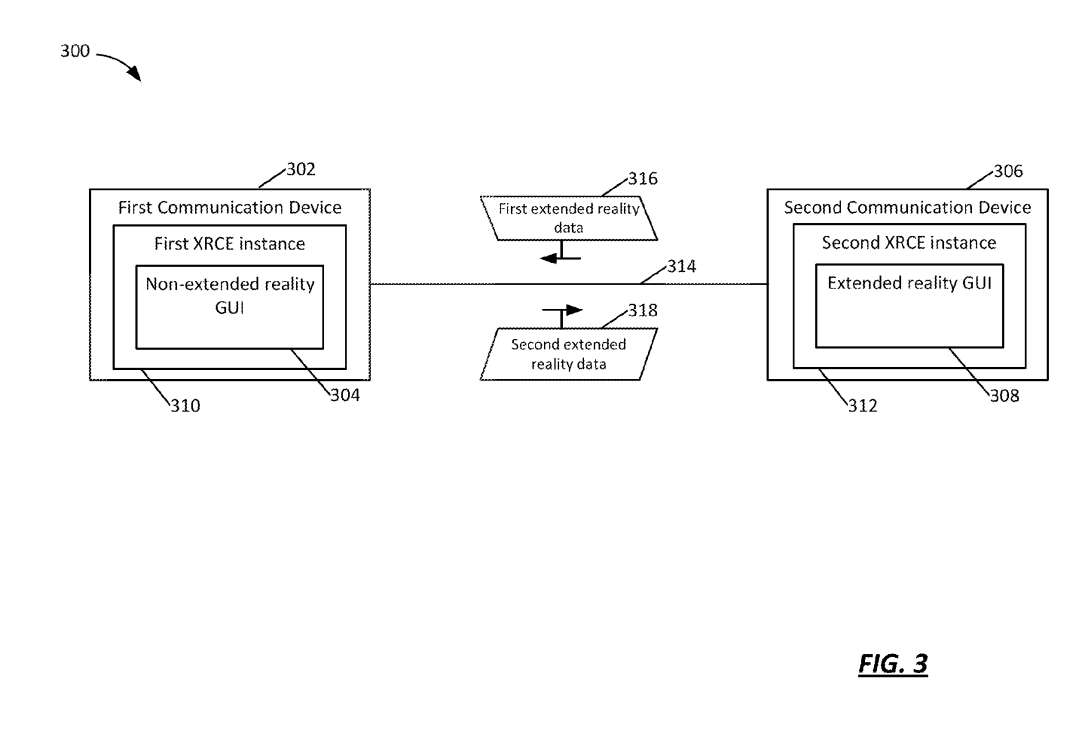

Figure 3 shows a communication environment 300. Users can access XR sessions using devices with a range of capabilities. For example, a first user may use a first communication device 302, such as a laptop computer, and access the XR session through a non-XR graphical user interface (GUI) 304. A second user may use a second communication device 306, such as a headset, and access the XR session through the XR GUI 308.

Information describing the shared virtual elements of an XR session can be displayed based on a given device and can be adjusted based on the device's properties and capabilities.

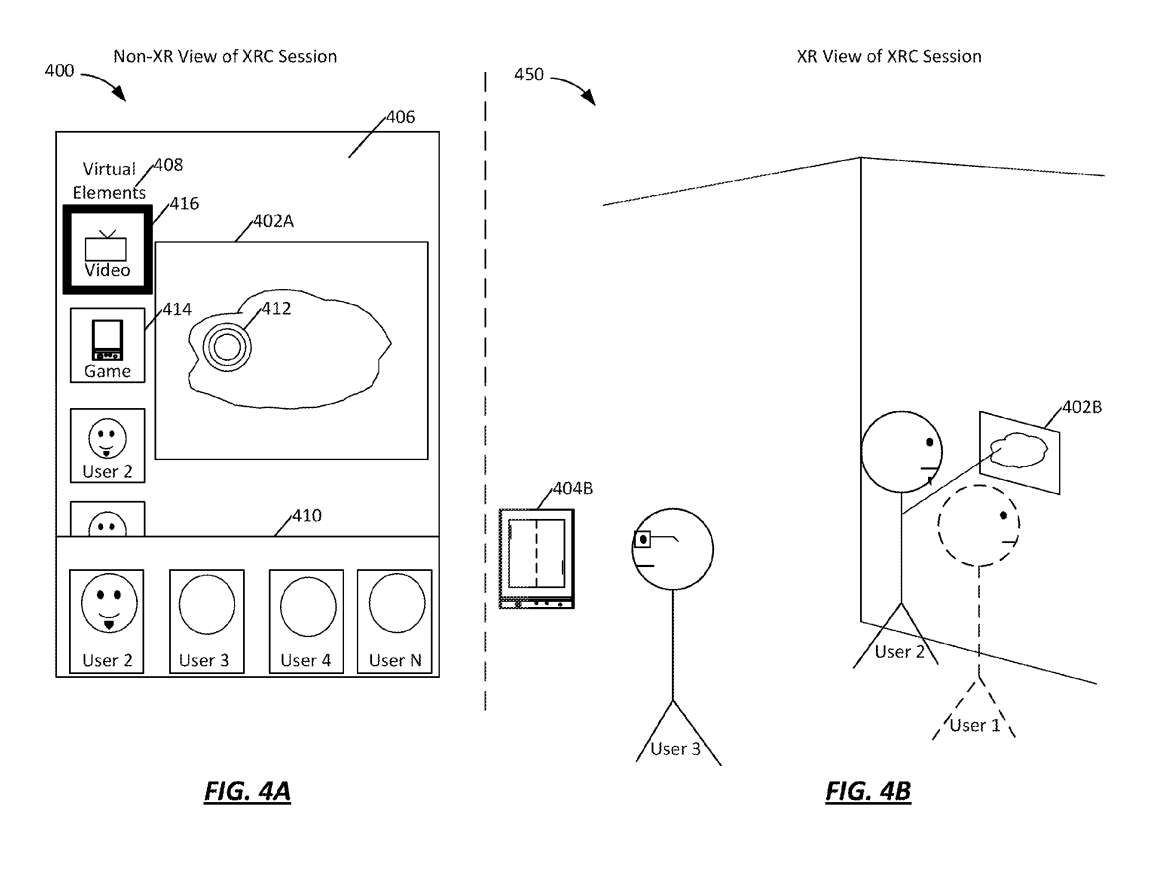

Figure 4A shows a non-XR first view 400 of an XR session, and Figure 4B shows an XR second view 450 of the same XR session.

Typically, the focus of an XR session is its content. A scene graph can encode the shared virtual elements and describe the three-dimensional relationships relative to those elements, which enhances participants' ability to interact with the session content.

The same scene graph can be used to render the first view 400 and the second view 450. For example, assuming the views are from the same POV, a first device that cannot display an XR view can render the first view 400 from the scene graph, while a device that can display an XR view can render the second view 450 from the same scene graph.

As another example, the same scene graph could include one or more virtual objects, descriptions of those virtual objects, and their respective locations. The first device may display all or a subset of the virtual objects as a list in the virtual element UI 408, while the second device may display them in the second view 450. Devices capable of participating in an XR session can include a feature mapping layer to help the device determine how to render the elements described in the scene graph.
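As a rough illustration of one scene graph feeding two presentations, the following minimal sketch renders the same data as a flat list and as spatial placements. The class names, fields, and sample coordinates are assumptions made for illustration and do not come from the patent application.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualObject:
    name: str
    position: tuple  # hypothetical (x, y, z) location in the 3D setting


@dataclass
class SceneGraph:
    objects: list = field(default_factory=list)


def render_list_view(graph, limit=None):
    """Non-XR style: show all objects, or only the first `limit`, as a list."""
    names = [obj.name for obj in graph.objects]
    return names if limit is None else names[:limit]


def render_spatial_view(graph):
    """XR style: place each object at its location in the 3D setting."""
    return {obj.name: obj.position for obj in graph.objects}


graph = SceneGraph([
    VirtualObject("video", (0.0, 1.0, -2.0)),
    VirtualObject("game", (1.5, 0.0, -1.0)),
])
```

Both functions read the same `graph`, so a change to the shared data would show up in either presentation.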

Feature mapping layers can be used to transform various aspects of the scene graph for a device. A feature mapping layer can be specific to a device or device type and can define how that device renders the shared virtual elements of an XR session.
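One way to picture a feature mapping layer is as a per-device lookup from element kind to presentation style. The sketch below is hypothetical: the device types, element kinds, and style names are invented for illustration and are not taken from the patent application.

```python
# Hypothetical feature mapping layers, keyed by device type.
FEATURE_MAPS = {
    "headset": {"video": "3d_panel", "game": "3d_object", "user": "avatar"},
    "phone": {"video": "2d_tile", "game": "list_entry", "user": "list_entry"},
}


def map_element(device_type, element_kind):
    """Return how a device of this type would present an element of this kind."""
    layer = FEATURE_MAPS[device_type]
    # Element kinds the layer does not know about are simply not shown.
    return layer.get(element_kind, "hidden")
```

For example, `map_element("phone", "user")` yields a list entry, while the same element on a headset maps to an avatar.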

How a device renders shared elements depends on its capabilities. For example, a headset can display a 3D representation of an XR session, and its feature mapping layer can account for this. The headset can then be configured to present a view of the XR session in which the shared virtual elements are arranged spatially around the 3D setting. A user of the headset can then move spatially among the different shared virtual elements, such as video element 402B, game element 454, User 1, User 2, and User 3.

Tablets and smartphones can display the 3D setting of an XR session using a 2D, non-XR view that flattens the space of the XR session. The corresponding feature mapping layer may instruct the device to render a 3D representation of the 3D setting internally and then generate a 2D view 406 of the 3D setting via rasterization, ray tracing, or other means to display a 2D second view 450.
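The flattening step can be sketched with a simple pinhole projection that maps a 3D point to 2D pixel coordinates. This is a generic graphics illustration under stated assumptions (camera at the origin looking down the negative z axis, no aspect-ratio correction), not the method described in the patent application.

```python
def project_point(point, viewport_width, viewport_height, focal_length=1.0):
    """Project a 3D point onto a 2D viewport; return None if it is not in front of the camera."""
    x, y, z = point
    if z >= 0:  # the assumed camera looks down -z, so z >= 0 is at or behind it
        return None
    # Perspective divide: farther points move toward the viewport center.
    ndc_x = focal_length * x / -z
    ndc_y = focal_length * y / -z
    # Map the [-1, 1] range to pixels, with y growing downward on screen.
    px = (ndc_x + 1.0) / 2.0 * viewport_width
    py = (1.0 - ndc_y) / 2.0 * viewport_height
    return (px, py)
```

A point straight ahead of the camera, such as `(0, 0, -2)`, lands at the center of the viewport.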

In this case, the position of User 1's view 406 corresponds to the position of User 1's Avatar in the 3D setting of the XR session. When User 1 interacts with video element 402A in view 406, User 1's Avatar is shown viewing the corresponding video element 402B in the 3D setting of the XR session.

In the XR second view, users can point to, highlight, or interact with shared virtual elements. In this example, User 2 interacts with video element 402B. When User 2 touches video element 402B, the position of the touch relative to video element 402B can be determined and encoded in the scene graph. Because the position is encoded relative to the virtual element, the interaction translates across devices even when they differ in resolution, aspect ratio, size, and so on.
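A minimal sketch of element-relative encoding follows, assuming each device knows the on-screen rectangle of the element as `(left, top, width, height)` in its own pixels. The function names and rectangle layout are assumptions for illustration.

```python
def to_relative(touch_px, element_rect):
    """Convert a pixel touch into a 0..1 position within the element."""
    left, top, width, height = element_rect
    return ((touch_px[0] - left) / width, (touch_px[1] - top) / height)


def to_pixels(relative, element_rect):
    """Convert an element-relative position back to pixels on another device."""
    left, top, width, height = element_rect
    return (left + relative[0] * width, top + relative[1] * height)


# Touch on a device where the video occupies a 200x100 rectangle at (100, 50).
relative = to_relative((150, 100), (100, 50, 200, 100))
# The same spot on a device where the video fills a 1920x1080 screen.
remote_px = to_pixels(relative, (0, 0, 1920, 1080))
```

Only the relative position would need to be shared; each device resolves it against its own layout of the element.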

In embodiments, the non-XR view into an XR session is more limited than the XR view, and when using the non-XR view, it may be more difficult to see other users in the XR session, as well as other users who may be interacting with the same virtual elements.

To help resolve these issues, non-XR views can include a UI element that indicates the other users participating in an XR session.

The focus of XR sessions is often on shared virtual elements, so the feature mapping layer may include alternative ways of presenting specific shared virtual elements rather than arranging them spatially around a 3D setting. In embodiments, the shared virtual elements that a user can interact with in the XR session may be determined from the scene graph. These interactive shared virtual elements may be collected and displayed in the 2D second view 450.

By collecting and displaying interactive shared virtual elements, users can more easily find virtual elements they might interact with during an XR session.
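Collecting the interactive elements could look like a simple traversal of the scene graph. The nested-dictionary layout and the `interactive` flag below are assumptions for illustration; the patent application does not specify a data format.

```python
def collect_interactive(node):
    """Walk a scene graph depth-first and gather the names of interactive elements."""
    found = []
    if node.get("interactive"):
        found.append(node["name"])
    for child in node.get("children", []):
        found.extend(collect_interactive(child))
    return found


# Hypothetical scene graph: a room containing a video, a wall-mounted game, and a user.
scene = {
    "name": "room",
    "children": [
        {"name": "video", "interactive": True},
        {"name": "wall", "children": [{"name": "game", "interactive": True}]},
        {"name": "User 2", "interactive": True},
    ],
}

ui_entries = collect_interactive(scene)
```

The resulting list is what a virtual element UI could display, regardless of where each element sits in the 3D setting.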

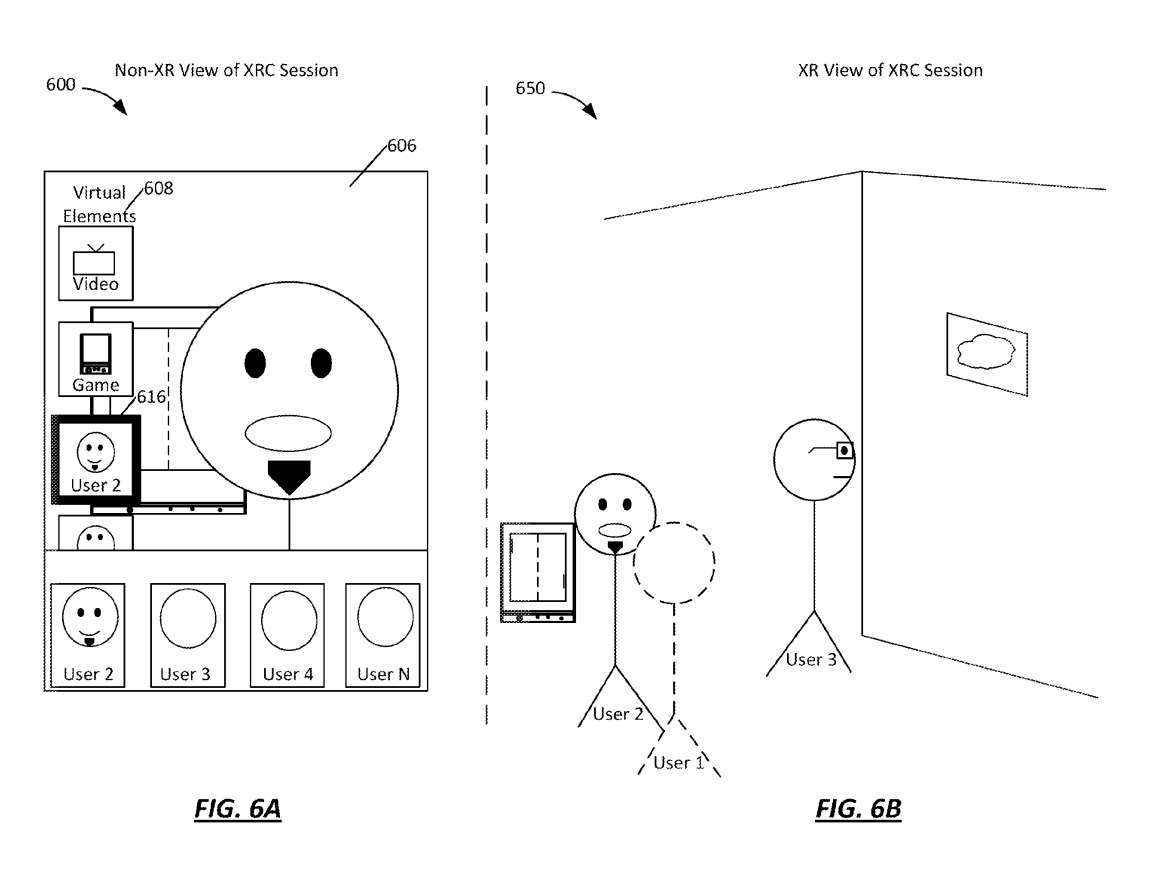

In one embodiment, a user can interact with another user through the virtual element UI. Referring to FIG. 6, User 1 may select User 2 from the virtual element UI 608. In response, view 606 displayed in first view 600 may move to face User 2, and user element 616 may be highlighted based on the selection.

In one embodiment, User 2 may be given the option to approve the interaction before moving view 606 . In the second view 650, User 1's Avatar can be moved to face User 2's Avatar.

Different motion data can be captured for each user. For example, User 1 can participate in an XR session via a tablet device that contains a camera and/or dot projector. The tablet device can analyze the images captured by the camera and/or dot projector to generate motion data, such as motion data for the user's face.

Participating devices can be configured to collect information from a variety of sensors and generate motion data in a common format from the relevant information. Another participating device can receive the motion data and use the motion data to animate the Avatar corresponding to the first user.
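A common motion format might be as simple as a small record serialized to JSON. The fields below (`head_yaw_deg`, `mouth_open`) and the avatar dictionary are hypothetical choices for illustration, not a format defined in the patent application.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class MotionData:
    user_id: str
    head_yaw_deg: float  # hypothetical field: head rotation in degrees
    mouth_open: float    # hypothetical field: 0.0 (closed) to 1.0 (open)


def encode(motion):
    """Serialize motion data so any participating device can read it."""
    return json.dumps(asdict(motion))


def decode(payload):
    """Rebuild motion data received from another device."""
    return MotionData(**json.loads(payload))


def animate_avatar(avatar, motion):
    """Apply received motion data to the avatar of the matching user."""
    avatar["head_yaw_deg"] = motion.head_yaw_deg
    avatar["mouth_open"] = motion.mouth_open
    return avatar


sample = MotionData("User 1", 15.0, 0.3)
avatar = animate_avatar({"owner": "User 1"}, decode(encode(sample)))
```

Because the sender reduces its sensor output to this shared record, the receiver does not need to know which sensors produced it.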

In embodiments, a user accessing an XR session through an XR view can also initiate an interaction. For example, User 2 might try to interact with User 1 by turning their Avatar towards User 1, pressing a button, trying to touch User 1's Avatar, and so on. When User 2 initiates an interaction with User 1, User 1's view 606 may move to a position facing User 2, and the user element 616 corresponding to User 2 may be highlighted in the virtual element UI 608.
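Turning a view to face another user amounts to computing a heading from one position to the other. The sketch below assumes a convention in which a yaw of 0 degrees looks down the negative z axis and positive yaw turns toward positive x; the convention and function name are assumptions, not details from the patent application.

```python
import math


def yaw_to_face(viewer_pos, target_pos):
    """Return the yaw in degrees that points the viewer at the target (height is ignored)."""
    dx = target_pos[0] - viewer_pos[0]
    dz = target_pos[2] - viewer_pos[2]
    # atan2 handles all quadrants; -dz because the assumed forward direction is -z.
    return math.degrees(math.atan2(dx, -dz))
```

For instance, a target directly to the viewer's right at `(2, 0, 0)` gives a yaw of about 90 degrees, and a target straight ahead gives 0.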

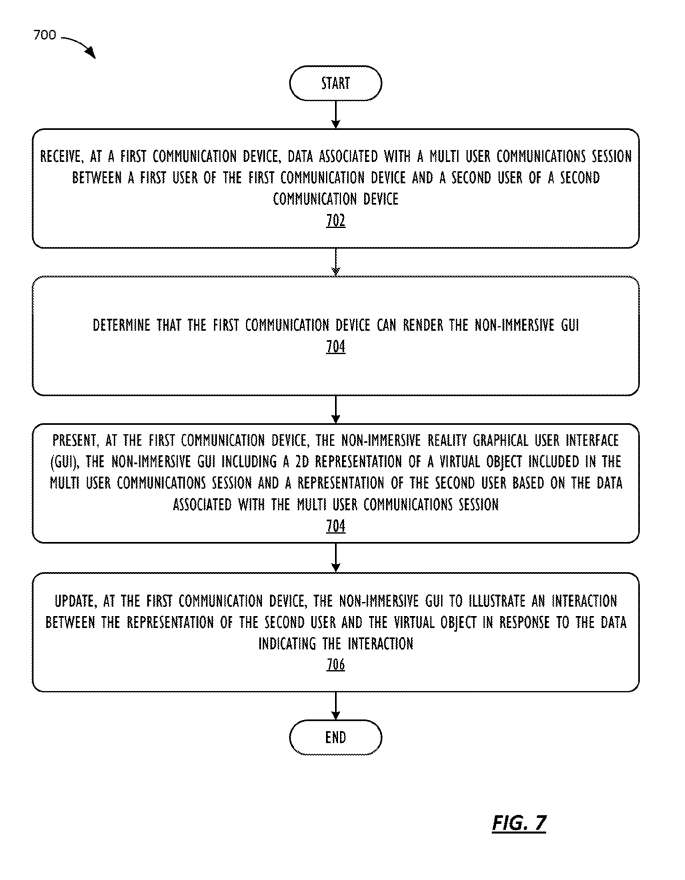

Figure 7 shows a first technique 700 for interaction in a multi-user communication session.

At 702, the first communication device receives data related to a multi-user communication session between a first user of the first communication device and a second user of a second communication device, wherein the first data related to the multi-user communication session is configured to be presented as either an XR graphical user interface or a non-immersive GUI.

At 704, the first communications device determines that it can present a non-immersive GUI. For example, a non-XR device may determine that it supports the non-XR view of the XR session, or that it does not support the XR view of the XR session.

At 706, the first communications device presents a non-XR GUI that includes a non-XR representation of the virtual objects in the multi-user communications session and a representation of the second user, based on the data related to the multi-user communications session. For example, a non-XR device can display a non-XR view of an XR session.

Non-XR views can contain a virtual element UI that represents the virtual elements with which users can interact, such as XR applications, other users, and other interactive virtual elements.
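Steps 702 to 706 can be sketched as a small function: take the received session data, check what the device supports, and build the matching GUI description. The data layout, key names, and the `device_supports_xr` flag are assumptions for illustration only.

```python
def choose_gui(device_supports_xr):
    """Step 704: decide which GUI this device can present."""
    return "xr" if device_supports_xr else "non_immersive"


def present(session_data, device_supports_xr, local_user):
    """Steps 702 and 706: take received session data and describe the GUI to show."""
    gui = choose_gui(device_supports_xr)
    others = [user for user in session_data["users"] if user != local_user]
    if gui == "xr":
        # XR GUI: objects keep their 3D positions and other users appear as avatars.
        return {"gui": gui, "scene": session_data["objects"], "avatars": others}
    # Non-immersive GUI: objects become a list and other users become indicators.
    return {
        "gui": gui,
        "object_list": sorted(session_data["objects"]),
        "user_indicators": others,
    }


# Hypothetical session data received at step 702.
session_data = {
    "users": ["User 1", "User 2"],
    "objects": {"video": (0, 1, -2), "game": (1, 0, -1)},
}
```

The same `session_data` serves both branches, which mirrors the idea that the data is configured for either kind of GUI.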

In embodiments, the first technique 700 may also include the second communication device receiving second data related to the multi-user communication session. For example, an XR device can participate in an XR session and receive data from the XR session.

In embodiments, the data may include a scene graph describing the virtual environment and the elements of the XR session. Based on the second data, the second communication device may present an XR GUI that includes an XR representation of the virtual object and an Avatar of the first user in the second user's environment.

The second communication device can update the XR GUI to show a second interaction between the Avatar and the virtual object in response to the second data indicating that interaction.

In one embodiment, image data may be captured in the first communications device. For example, one or more cameras of the first device may capture image data. The first communications device may generate motion data describing the first user's motion based on the image data.

For example, images captured by a camera can be analyzed to generate motion data. The first communication device can transmit the motion data to the second communication device. The second communication device may receive the motion data and animate the first user's Avatar based on the motion data.

In embodiments, the first communications device may update the non-XR GUI to show the representation of the second user as disengaged from the virtual object, indicating that the second user is no longer interacting with the object.

In an embodiment, the non-immersive GUI is based on a scene graph and includes a view into the multi-user communication session, a list of the virtual objects in the session, and an indication of the other users in the session. The XR GUI is also based on the scene graph and includes a three-dimensional view of the multi-user communication session.

Related patents: Apple Patent | Asymmetric presentation of an environment

Titled “Asymmetric presentation of an environment,” Apple’s patent application was originally filed in March 2023 and was recently published by the U.S. Patent and Trademark Office.
