Excerpts from new AR/VR patent applications published by the U.S. Patent and Trademark Office on November 24, 2023

Article related citations and references: XR Navigation Network

A total of 51 patents have been updated.

(XR Navigation Network, November 24, 2023) The U.S. Patent and Trademark Office recently published a new batch of AR/VR patents. The following excerpts, 51 in total, were compiled by XR Navigation Network (click a patent title for details). For more patent disclosures, visit the patent section of XR Navigation Network at https://patent.nweon.com/ to search. You can also join the XR Navigation Network AR/VR patent exchange WeChat group (see the end of the article for details).

In one embodiment, the method described in the patent may use visual and inertial measurements and the factory-calibrated extrinsic parameters of the sensors as inputs; perform bundle adjustment, using nonlinear optimization of the estimated state constrained by the measurements and the factory-calibrated extrinsic parameters; and jointly optimize the inertial constraints, the IMU calibration, and the camera calibration. The outputs of the process may include the most probable estimated state, such as 3D mapping data of the environment, device trajectories, and/or updated extrinsic parameters of the vision and inertial sensors.

2.《Magic Leap Patent | Insert For Augmented Reality Viewing Device》

In one embodiment, the patent describes a visual perception device. The visual perception device has corrective optical components for viewing virtual and real-world content. The patent also describes an insert for the corrective optical components that may be attached using magnetic components, pins, and/or a nosepiece. The nosepiece is height adjustable and adapts to different users.

In one embodiment, the head-mounted display system described in the patent may include a head-mountable frame, a light projection system configured to output light to provide image content to a user's eyes, and a waveguide supported by the frame. The waveguide may be configured to direct at least a portion of the light from the light projection system coupled into the waveguide to present image content to the user's eyes. The system may include a grating that includes a first reflective diffractive optical element and a second reflective diffractive optical element. The combination of the first and second reflective diffractive optical elements may operate as a transmissive diffractive optical element. The first reflective diffractive optical element may be a volume phase holographic grating. The second reflective diffractive optical element may be a liquid crystal polarizing grating.

In one embodiment, the patent describes an example of forming a waveguide portion with a predetermined shape. A photocurable material is dispensed into the space between a first mold portion and a second mold portion opposite the first mold portion. The spacing between the surface of the first mold portion and the surface of the second mold portion is adjusted so that the photocurable material fills the space between the two mold portions. The photocurable material is irradiated with radiation to form a cured waveguide film in which different parts have different stiffnesses. The cured waveguide film is separated from the first and second mold portions, and the waveguide portion is then separated from the cured waveguide film.

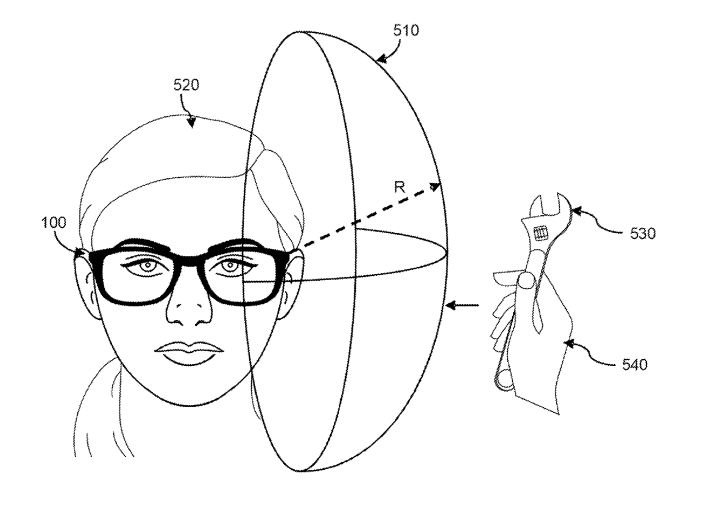

In one embodiment, based on the tracked positioning of the user's eyes, the system can adjust the light entering the user's eyes to control the image shell generated at the user's eyes. Image shell refers to the way that light entering the eye is focused on the retina of the eye. Example properties of image shells include image shell center, image shell curvature, image shell shape, etc. Users can focus on objects in the artificial reality environment, and light from the objects can generate image shells at the user's eyes. Image shells at the user's eyes can affect the user's vision and/or eye biology.

In one embodiment, the method described in the patent may include establishing a virtual boundary for a virtual world environment with reference to a real-world environment; determining whether the virtual boundary requires correction; providing an alert in response to determining that the virtual boundary requires correction; receiving, in response to the alert, a request from a user to modify the virtual boundary; and, in response to the request from the user, monitoring the direction of a directional indicator to generate directional data and modifying the virtual boundary based on the directional data.

In one embodiment, the patent describes a method that dynamically controls the optical conditions presented to the user by an artificial reality system based on monitored parameters of the user's visual experience. For example, the artificial reality content rendered to the user can be tracked to monitor visual experience parameters such as light properties (e.g., color), focal length, virtual object properties (e.g., object/text color and size), the background's gathered defocus distance, brightness, and other suitable conditions. The optical conditions presented by the artificial reality system can then be changed based on the monitoring, for example by changing the focal length of a virtual object, the text size, the text/background color, and other suitable optical conditions.

In one embodiment, the manufacturing method described in the patent follows a programmed knitting sequence for a multi-dimensional knitting machine. The method includes providing a non-knitted structure to the multi-dimensional knitting machine at a point in time when the fabric structure has a first knitted portion, where the first knitted portion is formed based on a first type of knitting pattern. The method also includes, after providing the non-knitted structure, following the programmed knitting sequence to automatically adjust the multi-dimensional knitting machine to use a second type of knitting pattern, different from the first type, to accommodate the non-knitted structure within a second knitted portion adjacent to the first knitted portion within the fabric structure.

In one embodiment, the electrically conductive deformable fabric has electrically conductive traces, the electrically conductive traces have a non-extensible fixed length along the first axis, and the electrically conductive traces are sewn into the fabric structure to create the electrically conductive deformable material. The fabric structure includes a stitch pattern that facilitates the conductive traces to expand and fold in an oscillatory manner to allow the conductive traces to expand and contract, respectively, along the first axis without exceeding a fixed length of the conductive traces. The conductive deformable material is positioned within the wearable device such that when the wearable device is worn, the stitch pattern is over the user's joints to allow the stitch pattern to expand or contract as the joint moves.

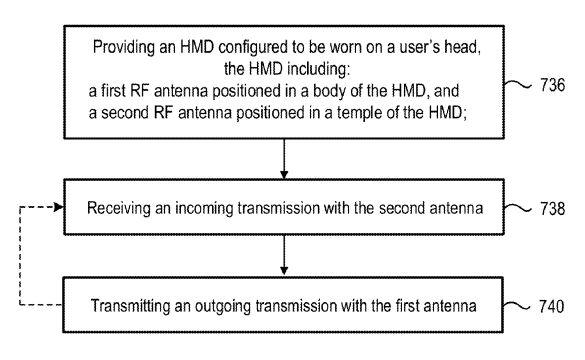

In one embodiment, the patent describes an electronic device having a frame configured to be worn on a user's head. The body is configured to support the lens. The electronic device includes a processor located within a frame and an RF antenna located within the body and in data communication with the processor.

In one embodiment, the patent describes a method for collecting telemetry data to update a 3D map of an environment. The method comprises performing a first relocalization event, in which a first 3D map pose of a pose tracker is calculated from data observed by the pose tracker at time t1, and a first local pose of the pose tracker at time t1 is received. A second relocalization event then occurs, in which a second 3D map pose of the pose tracker is calculated from data observed at time t2, and a second local pose of the pose tracker at time t2 is received. A first relative pose between the first and second 3D map poses is calculated. A second relative pose between the first and second local poses is calculated. The residual, which is the difference between the first relative pose and the second relative pose, is stored as input to the process for updating the 3D map.
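The residual computation in this entry can be sketched in a few lines. This is only an illustration under simplifying assumptions that are not in the patent excerpt: poses are planar `(x, y, theta)` tuples rather than full 3D poses, and the "difference" between the two relative poses is taken component-wise with the angle wrapped. All function names are hypothetical.

```python
import math

def wrap(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def compose(a, b):
    """Compose two planar poses (x, y, theta): express b in the frame of a."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            wrap(at + bt))

def inverse(p):
    """Invert a planar pose."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), s * x - c * y, wrap(-t))

def relative_pose(first, second):
    """Pose of `second` as seen from `first`."""
    return compose(inverse(first), second)

def relocalization_residual(map_pose_t1, map_pose_t2, local_pose_t1, local_pose_t2):
    """Difference between the map-frame and local-frame relative poses.

    Assumption: a simple component-wise difference; a real system might
    instead compose one relative pose with the inverse of the other.
    """
    rel_map = relative_pose(map_pose_t1, map_pose_t2)
    rel_local = relative_pose(local_pose_t1, local_pose_t2)
    return (rel_map[0] - rel_local[0],
            rel_map[1] - rel_local[1],
            wrap(rel_map[2] - rel_local[2]))

# Example: the map says the tracker moved 1.0 m forward, local tracking says 0.9 m.
residual = relocalization_residual(
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
    (5.0, 5.0, math.pi / 2), (5.0, 5.9, math.pi / 2),
)
```

A residual near zero would suggest the map and the local tracking agree between the two relocalization events; a larger residual is the kind of signal that could feed a map update.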

In one embodiment, the system described in the patent is configured to control the transition and display of interface objects that selectively move across boundary transitions of a physical display screen within an augmented reality scene. When the virtual object instance of the interface object moves into the bounded area of the physical display screen within the augmented reality scene, the corresponding real world object instance of the interface object is generated and presented within the bounded display area of the display screen. When user input is received to move a real-world object instance of an interface object outside of the bounded display area of the display screen within the augmented reality scene, a corresponding virtual object instance of the interface object is generated and presented in the augmented reality scene outside the display.

In one embodiment, the patent describes a method for a high field rate display configured to display fields of rendered frames at a field rate. The method includes receiving a stream of rendered frames for display on the high field rate display; applying early-stage reprojection to rendered frames of the rendered frame stream; and applying late-stage reprojection to fields of the rendered frames at the field rate. The early-stage reprojection uses more computing resources than the late-stage reprojection.

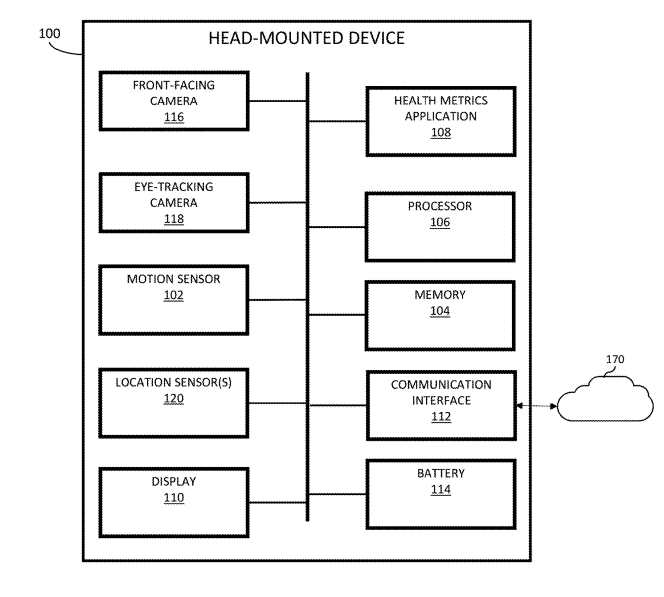

In one embodiment, the patent describes an apparatus that includes a frame, a motion sensor coupled to the frame, and a processor in communication with the motion sensor. The processor is configured by instructions to receive a motion signal captured by the motion sensor, determine when to extract features from the motion signal, extract features from the motion signal, generate a signal image based on the extracted features, and process the signal image to output one or more health indicators.

In one embodiment, a polarization mechanism for a head-mounted device includes a linear polarizing film and a wave plate film configured to polarize ambient light before it is guided by the waveguide to the user's eyes. The wave plate film is also configured to receive reflections of polarized ambient light off the waveguide and to polarize the reflections in a circular direction such that the reflections are polarized in a first linear direction. The linear polarizing film then receives the reflections from the wave plate film, polarized in the first linear direction, and polarizes them in a second linear direction perpendicular to the first linear direction.
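The effect of such a polarizer and wave plate stack on reflections can be illustrated with Jones calculus. The sketch below rests on assumptions that the excerpt does not state: the wave plate is treated as an ideal quarter-wave plate with its fast axis at 45 degrees, the polarizer passes horizontal light, and the reflection off the waveguide is modeled as an ideal identity in a fixed lab frame. The names are hypothetical.

```python
# Jones matrices in a fixed lab frame (assumed ideal, lossless components).
H_POLARIZER = ((1, 0), (0, 0))               # passes horizontal, blocks vertical
QWP_45 = ((0.5 + 0.5j, 0.5 - 0.5j),
          (0.5 - 0.5j, 0.5 + 0.5j))          # quarter-wave plate, fast axis at 45 degrees

def apply(matrix, vec):
    """Multiply a 2x2 Jones matrix by a 2-component Jones vector."""
    return tuple(sum(m * v for m, v in zip(row, vec)) for row in matrix)

def intensity(vec):
    """Optical intensity of a Jones vector."""
    return sum(abs(c) ** 2 for c in vec)

def trace_reflection(ambient):
    """Follow ambient light into the stack and its reflection back out."""
    polarized = apply(H_POLARIZER, ambient)   # linear after the polarizing film
    circular = apply(QWP_45, polarized)       # circular after the wave plate
    reflected = circular                      # assumption: ideal reflection
    linear = apply(QWP_45, reflected)         # back to linear, rotated 90 degrees
    blocked = apply(H_POLARIZER, linear)      # perpendicular to the pass axis
    return polarized, circular, linear, blocked

stages = trace_reflection((0.6, 0.8j))
```

Under these assumptions the two passes through the quarter-wave plate act like a half-wave plate, so the reflection returns polarized perpendicular to the film's pass axis and its intensity after the film drops to zero.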

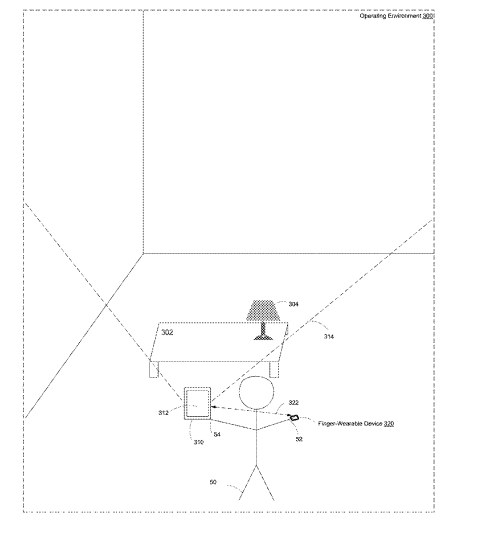

In one embodiment, the user can perform physical gestures to interact with the head mounted device. A fitness tracker worn on the user's wrist can be used to detect movements of the wrist as gestures, which are then communicated to the headset. Accessory-mediated gesture interactions can be interrupted when the accessory device loses power or otherwise stops tracking. In this case, the headset can take over gesture detection by monitoring the distortion of the magnetic field caused by the movement of the device. This form of gesture detection can be used when an active device drains its battery.

In one embodiment, the method described in the patent may include selecting an image from a set of images captured by a wearable device camera; identifying a region of interest in the image based on the location of the wearable device's overlay display; determining characteristics of the region of interest; determining characteristics of content to be presented on the overlay display; modifying the content based on the characteristics of the region of interest and the characteristics of the content; and presenting the modified content on the overlay display.

In one embodiment, the patent describes a method that includes receiving image data of a plurality of objects of interest to a user, and receiving from the user a query that references the plurality of objects and requests a digital assistant to identify color matching insights associated with the plurality of objects referenced by the query. The method further includes processing the query and the image data to: identify, for each particular object among the plurality of objects referenced by the query, one or more corresponding colors of the particular object; and determine the color matching insights associated with the plurality of objects. The method further includes generating content indicating the identified color matching insights associated with the plurality of objects.

19.《》

In one embodiment, the method described in the patent provides depth-aware image editing and interactive functionality. In particular, computer applications can provide image-related functionality. Among them, the image-related features utilize a combination of depth maps and segmentation data to process one or more images and generate edited versions of one or more images.

In one embodiment, the patent describes a projection lens optical system. The projection lens optical system is used in the projection device of the wearable device and includes a first lens, a second lens, a third lens, a fourth lens, a fifth lens and a sixth lens arranged in order from the emission area to the image plane.

21.《Samsung Patent | Information generation method and device》

In one embodiment, the information generation method and device described in the patent relate to the field of augmented reality technology. The method includes obtaining relative position information of the user with respect to a target object in a virtual environment, determining sensory information corresponding to the target object based on the relative position information and attribute information of the target object, and converting the sensory information into electrical signals used to stimulate the user through a brain-computer interface device.

In one embodiment, the patent describes a frequency multiplier for a wireless communication system. The frequency multiplier includes an input circuit to which a local oscillator (LO) signal is input; a multiplier circuit having one end connected to the input circuit and the other end connected to the lower end of a load circuit; the load circuit, whose upper end is connected to a voltage controller; and the voltage controller. The voltage controller is arranged between the upper end of the load circuit and the input power supply. It may be configured to reduce the voltage between the input power supply and the upper end of the load circuit, and a feedback voltage based on the upper-end voltage of the load circuit is fed back into the voltage controller.

In one embodiment, the augmented reality device described in the patent adjusts depth values based on the gravity direction measured by the IMU sensor to obtain a highly accurate depth map without additional hardware modules. The augmented reality device obtains a depth map from an image captured with a camera, obtains a normal vector of at least one pixel included in the depth map, modifies the direction of the normal vector of the at least one pixel based on the gravity direction measured by the IMU sensor, and adjusts the depth value of the at least one pixel based on the modified direction of the normal vector.

In one embodiment, the patent describes a method of segmenting a point cloud via an electronic device. The method includes receiving a point cloud including colorless data and/or featureless data; determining one or more normal vectors of the received point cloud and/or one or more spatial features of the received point cloud; and segmenting the point cloud based on at least one of the one or more normal vectors and the one or more spatial features.

In one embodiment, the display system described in the patent can utilize polarization multiplexing, allowing for improved optimization of diffractive optics. Display systems can selectively polarize light based on wavelength or field of view. The optical combiner may include polarization-sensitive diffractive optical elements, each optimized for a color subset or portion of the entire field of view, thereby providing improved corrective optics for the display system.

In one embodiment, the augmented reality device applies a smoothing correction method to correct the position of virtual objects presented to the user. The AR device can apply an angle threshold to determine whether the virtual object can move from the original position to the target position. The angle threshold is the maximum angle that the line from the AR device to the virtual object can change within a time step. Similarly, AR devices can apply motion thresholds. In addition, the AR device can apply a pixel threshold to the correction of the position of the virtual object.
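A minimal sketch of the angle threshold idea follows. It assumes a 2D top-down view and assumes that a correction exceeding the threshold is limited to the maximum angle rather than rejected outright; the excerpt does not say which of the two the patent does. The function name and parameters are hypothetical.

```python
import math

def limit_angular_step(device, current, target, max_angle_rad):
    """Move a virtual object toward `target`, limiting how far the
    device-to-object line may rotate in one time step.

    All positions are (x, y) tuples in a shared 2D frame (an assumption).
    """
    cur_dx, cur_dy = current[0] - device[0], current[1] - device[1]
    tgt_dx, tgt_dy = target[0] - device[0], target[1] - device[1]
    cur_angle = math.atan2(cur_dy, cur_dx)
    tgt_angle = math.atan2(tgt_dy, tgt_dx)
    # Signed angular change, wrapped to [-pi, pi).
    delta = (tgt_angle - cur_angle + math.pi) % (2 * math.pi) - math.pi
    if abs(delta) <= max_angle_rad:
        return target  # within the threshold: apply the full correction
    # Otherwise rotate only by the allowed amount, at the target's distance.
    limited = cur_angle + math.copysign(max_angle_rad, delta)
    distance = math.hypot(tgt_dx, tgt_dy)
    return (device[0] + distance * math.cos(limited),
            device[1] + distance * math.sin(limited))

# A 90 degree jump is spread out: this step only rotates by 10 degrees.
step = limit_angular_step((0.0, 0.0), (1.0, 0.0), (0.0, 1.0), math.radians(10))
```

Calling the function once per time step with the previous result as `current` would walk the object toward the target without any single visible jump larger than the threshold.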

27.《Apple Patent | Biometric authentication system》

In one embodiment, a device for biometric authentication may capture and analyze two or more biometric features or aspects, individually or in combination, to identify and authenticate a person. An imaging system captures images of the person's iris, eye, periorbital area, and/or other areas of the face and analyzes two or more features in the captured images, individually or in combination, to identify and authenticate the person and/or detect attempts to spoof biometric authentication. Embodiments may improve the performance of biometric authentication systems and may help reduce false positives from biometric authentication algorithms.

In one embodiment, the headset described in the patent may have left and right displays that provide corresponding left and right images. The left and right optical combiner systems can be configured to deliver real-world light to the left and right viewports while directing the left and right images to the left and right viewports, respectively. Misalignment of the left and right images relative to the left and right viewports can be monitored using a gaze tracking system, or using a camera such as a front-facing camera combined with a database of known real-world object properties, among other approaches. Compensatory adjustments to the images can then be made based on the measured misalignment.

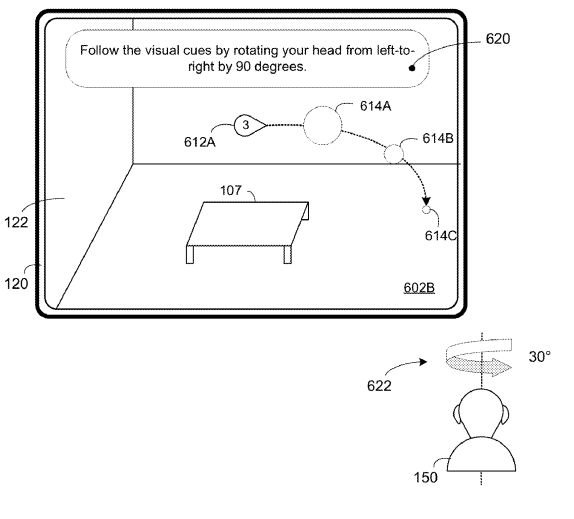

In one embodiment, the method described in the patent includes: obtaining a user profile and head pose information of a user while presenting a 3D environment; determining, based on the user profile and the head pose information, a location within the 3D environment for a first visual cue for a first portion of a guided stretching session; presenting the first visual cue and a directional indicator at the determined location within the 3D environment; and, in response to detecting a change in the head pose information: updating a position of the directional indicator based on the change in the head pose information; and, based on a determination that the change in the head pose information satisfies a criterion associated with the first visual cue, providing at least one of audio, haptic, or visual feedback indicating that the first visual cue has been completed for the first portion of the guided stretching session.

30.《Apple Patent | Flexible illumination for imaging systems》

In one embodiment, the patent describes a method for flexible illumination to improve the performance and robustness of imaging systems. Several different lighting configurations for the imaging system are pre-generated. Each lighting configuration can specify one or more aspects of lighting. A lookup table can be generated that associates each pose with a corresponding lighting configuration. The user can put on, hold, or otherwise use the device. A process can be initiated in which the controller can select different lighting configurations to capture images of the user's eyes, periorbital area, or face in different poses and under different conditions for use in biometric authentication or gaze tracking processes.
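The pose-to-configuration lookup table described here could look roughly like the following. The pose labels, the fields of a lighting configuration, and the fallback behavior are all illustrative assumptions; the excerpt only says that each configuration specifies one or more aspects of lighting and that a table associates poses with configurations.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LightingConfig:
    """Hypothetical aspects of lighting that a configuration might specify."""
    enabled_leds: tuple      # indices of the illumination sources to switch on
    intensity: float         # relative drive level, 0.0 to 1.0
    wavelength_nm: int       # e.g. a near-infrared wavelength

# Pre-generated configurations, keyed by a coarse pose label (assumed labels).
LIGHTING_TABLE = {
    "eye_centered": LightingConfig(enabled_leds=(0, 1, 2, 3), intensity=0.5, wavelength_nm=850),
    "eye_looking_left": LightingConfig(enabled_leds=(2, 3), intensity=0.7, wavelength_nm=850),
    "eye_looking_right": LightingConfig(enabled_leds=(0, 1), intensity=0.7, wavelength_nm=850),
}

DEFAULT_CONFIG = LIGHTING_TABLE["eye_centered"]

def select_lighting(pose_label, table=LIGHTING_TABLE, default=DEFAULT_CONFIG):
    """Return the lighting configuration for a detected pose.

    Falling back to a default for unknown poses is an assumption of this sketch.
    """
    return table.get(pose_label, default)

config = select_lighting("eye_looking_left")
```

Keeping the configurations pre-generated means the controller only performs a dictionary lookup at capture time, which fits the excerpt's description of selecting among existing configurations.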

In one embodiment, generating a three-dimensional virtual representation of a three-dimensional physical object may be based on capturing or receiving a set of images. Generating virtual representations of physical objects may be facilitated by a user interface for identifying the physical objects and capturing a set of images of the physical objects. Generating a virtual representation can include previewing or modifying a set of images. Generating a virtual representation of the physical object may include generating a first representation of the physical object and/or generating a second three-dimensional virtual representation of the physical object. A visual indication of the progress of the image capture process and/or the generation of the virtual representation of the three-dimensional object may be displayed, for example, in the capture user interface.

In one embodiment, generating a three-dimensional virtual representation of a three-dimensional physical object may be based on capturing or receiving a set of images. Generating virtual representations of physical objects may be facilitated by a user interface for identifying the physical objects and capturing a set of images of the physical objects. Generating a virtual representation can include previewing or modifying a set of images. Generating a virtual representation of the physical object may include generating a first representation of the physical object and/or generating a second three-dimensional virtual representation of the physical object. A visual indication of the progress of the image capture process and/or the generation of the virtual representation of the three-dimensional object may be displayed, for example, in the capture user interface.

33.《Apple Patent | Populating a graphical environment》

In one embodiment, the patent describes a method that includes obtaining a request to populate an environment with variations of an object characterized by at least one visual property; generating the variations of the object by assigning corresponding values to the at least one visual property based on one or more distribution criteria; and displaying the variations of the object in the environment so as to satisfy a presentation criterion.

In one embodiment, generating a three-dimensional virtual representation of a three-dimensional physical object may be based on capturing or receiving a set of images. Generating virtual representations of physical objects may be facilitated by a user interface for identifying the physical objects and capturing a set of images of the physical objects. Generating a virtual representation can include previewing or modifying a set of images. Generating a virtual representation of the physical object may include generating a first representation of the physical object and/or generating a second three-dimensional virtual representation of the physical object. A visual indication of the progress of the image capture process and/or the generation of the virtual representation of the three-dimensional object may be displayed, for example, in the capture user interface.

In one embodiment, the method described in the patent includes: obtaining a first image of an environment from a first image sensor associated with a first intrinsic parameter; performing a warping operation on the first image according to a perspective offset value to produce a warped first image that accounts for perspective differences between the first image sensor and a user of the electronic device; determining, based on the warped first image, an occlusion mask that includes a plurality of holes; obtaining a second image of the environment from a second image sensor associated with a second intrinsic parameter; normalizing the second image based on a difference between the first and second intrinsic parameters to produce a normalized second image; and filling a first set of one or more holes of the occlusion mask based on the normalized second image to produce a modified first image.
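The final hole-filling step can be sketched as below. The example assumes that the warping and normalization have already happened, that all three inputs are same-sized grids stored as nested lists, and that `None` marks pixels where the second image has no data. None of these representation choices come from the patent excerpt.

```python
def fill_holes(warped_first, occlusion_mask, normalized_second):
    """Fill masked holes in the warped first image from the normalized second image.

    warped_first      -- grid of pixel values (holes may hold any placeholder)
    occlusion_mask    -- grid of booleans, True where the warped image has a hole
    normalized_second -- grid of pixel values, or None where no data is available
    Returns the modified image and the number of holes left unfilled.
    """
    filled = [row[:] for row in warped_first]  # copy so the input is untouched
    unfilled = 0
    for r, mask_row in enumerate(occlusion_mask):
        for c, is_hole in enumerate(mask_row):
            if not is_hole:
                continue
            value = normalized_second[r][c]
            if value is None:
                unfilled += 1          # left for some other fill strategy
            else:
                filled[r][c] = value
    return filled, unfilled

warped = [[10, 0, 12], [0, 14, 0]]
mask = [[False, True, False], [True, False, True]]
second = [[9, 11, 13], [None, 15, 17]]
modified, remaining = fill_holes(warped, mask, second)
```

Reporting the number of unfilled holes reflects the wording "a first set of one or more holes": the second image may cover only some of them.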

36.《Apple Patent | Influencing actions of agents》

In one embodiment, the method described in the patent includes obtaining, by a first agent engine that generates actions for a first agent, a first goal of the first agent; and generating, by the first agent engine, a first influence for a second agent engine that generates actions for a computer-generated reality (CGR) representation of a second agent. The first influence is based on the first goal of the first agent. The CGR representation of the second agent is triggered to perform a set of one or more actions that advances the first goal of the first agent, and the second agent engine generates the set of one or more actions based on the first influence generated by the first agent engine.

In one embodiment, the method described in the patent includes unobtrusive eye alignment for a user device through simultaneous or nearly simultaneous eye feature tracking and eye model updates. Light may be directed toward the user's eyes to produce glint reflections, and image frames depicting the glint reflections are obtained via one or more sensors. Based on the image frames, eye features are tracked and an eye model of the eye is updated during the period between obtaining a first of the image frames and obtaining a second of the image frames.

In one embodiment, the method described in the patent includes detecting one or more external devices; obtaining image data of the physical environment captured by an image sensor; determining whether the image data includes a representation of a first external device among the detected one or more external devices; and, based on determining that the image data includes a representation of the first external device, causing the display to simultaneously display a representation of the physical environment according to the image data and an affordance corresponding to a function of the first external device.

In one embodiment, the patent describes a method that includes obtaining limb tracking data from a limb tracker; displaying a computer-generated representation of a trackpad spatially associated with a physical surface; identifying a first position within the computer-generated representation of the trackpad based on the limb tracking data; mapping the first position to a corresponding position within a content manipulation area; and displaying an indicator indicating the mapping. The indicator may overlap the corresponding position within the content manipulation area.

In one embodiment, the patent describes a facial interface for a head-mounted display worn on a user's head, the facial interface including an upper portion and a lower portion. The upper portion engages the upper area of the user's face above the eyes. The lower portion engages the lower area of the user's face below the eyes. The lower portion has a lower shear compliance that is greater than the upper shear compliance of the upper portion.

In one embodiment, the head-mounted device described in the patent may have an optical module that presents images to the user's eyes. Each optical module may have a lens barrel with a display and a lens that presents an image from the display to a corresponding viewing window. To accommodate users with different interpupillary distances, the optical module may be slidably coupled to a guide member such as a guide rod. The actuator can slide the optical modules toward or away from each other along the guide rods.

In one embodiment, the patent describes a data processing apparatus comprising an input unit configured to receive data corresponding to at least a portion of a mesh, where the at least a portion of the mesh includes a plurality of vertices and each vertex corresponds to a location in a virtual space. The mesh also includes a plurality of polygons, where each polygon has a perimeter made up of three or more lines, and each of the three or more lines connects two of the plurality of vertices. The data processing apparatus also includes a generating unit that, in a first stage, generates two or more seed points based on the received data, where each seed point corresponds to a position in the virtual space, and, in a second stage, generates two or more meshlets, where each meshlet includes at least a subset of the mesh. The data processing apparatus also includes an output unit configured to output data corresponding to one or more of the generated meshlets.
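A two-stage generator of this kind might be sketched as follows. The specific strategies are assumptions chosen for illustration, not the patent's method: the first stage picks seed points by farthest-point sampling over the vertices, and the second stage assigns each triangle to the seed nearest its centroid. Function names are hypothetical.

```python
import math

def pick_seeds(vertices, count):
    """Stage 1 (assumed strategy): farthest-point sampling over the vertices."""
    seeds = [vertices[0]]
    while len(seeds) < count:
        # Choose the vertex whose nearest existing seed is farthest away.
        farthest = max(vertices, key=lambda v: min(math.dist(v, s) for s in seeds))
        seeds.append(farthest)
    return seeds

def build_meshlets(vertices, triangles, seeds):
    """Stage 2 (assumed strategy): group triangles by their nearest seed point."""
    meshlets = [[] for _ in seeds]
    for tri in triangles:
        centroid = tuple(sum(vertices[i][axis] for i in tri) / 3 for axis in range(3))
        nearest = min(range(len(seeds)), key=lambda k: math.dist(centroid, seeds[k]))
        meshlets[nearest].append(tri)
    return meshlets

# Two separate quads, each split into two triangles (indices into `vertices`).
vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0),
            (4, 0, 0), (5, 0, 0), (4, 1, 0), (5, 1, 0)]
triangles = [(0, 1, 2), (1, 3, 2), (4, 5, 6), (5, 7, 6)]
seeds = pick_seeds(vertices, 2)
meshlets = build_meshlets(vertices, triangles, seeds)
```

Each resulting meshlet is a subset of the mesh's polygons, which matches the excerpt's requirement that a meshlet include at least a subset of the mesh.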

43.《Sony Patent | Player selection system and method》

In one embodiment, the game selection system described in the patent includes an emotion processor configured to obtain a user's current emotional state; a descriptor processor configured to obtain one or more emotion descriptors associated with one or more games; an evaluation processor configured to predict the user's emotional outcome for each game based on the user's current emotional state and the one or more emotion descriptors of the respective game; and a game selection processor configured to select one or more games based on whether their respective emotional outcomes satisfy at least a first predetermined criterion.

In one embodiment, the first recording section records chat data as voice data associated with time information. The second recording section records metadata that includes information, associated with the time information, indicating the person who spoke. The input image generation section generates input images for users to use in creating reports. The report creation section accepts information entered by the user and creates a chat-related report.

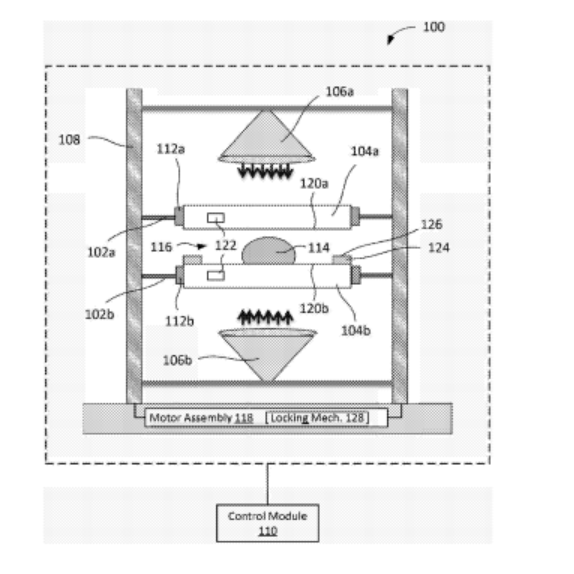

In one embodiment, the aroma chemical presentation device described in the patent includes a package support unit that supports an aroma chemical package encapsulating an aroma chemical material; an action body that comes into contact with the aroma chemical package to cause the aroma chemical material in the package to be emitted; and a fan that forms an air flow in a predetermined direction to disperse the emitted aroma chemical material.

In one embodiment, the patent describes an example apparatus for decoding point cloud data that includes a memory configured to store point cloud data, and one or more processors implemented in circuitry and configured to: determine a number of times a downscaled, encoded representation of the geometry of a point cloud is to be upscaled; decode the downscaled encoded representation of the point cloud geometry; upscale the downscaled representation of the point cloud geometry that number of times to form an upscaled representation of the point cloud geometry; and reproduce the point cloud using the upscaled representation of the point cloud geometry.

In one embodiment, the patent describes a system for asynchronous channel access control of wireless systems. A device can adjust the priority of one or more PPDUs and can perform other operations to ensure control of the wireless medium at a specific time while still allowing other devices to communicate on the wireless medium. For example, the device may adjust a backoff counter or one or more EDCA parameters to ensure that control of the wireless medium is obtained to send the first PPDU of the application file. For one or more subsequent PPDUs of the application file, the device may again adjust the backoff counter or one or more EDCA parameters to allow other devices to gain control of the wireless medium.

In one embodiment, the patent describes techniques for wireless communications by user equipment (UE). The techniques include receiving one or more configured grant (CG) configurations from a network entity, each configuration defining at least one CG occasion within a data period; obtaining traffic in one or more bursts; and sending the traffic to the network entity in multiple physical uplink shared channels (PUSCHs) within the data period.

In one embodiment, third-party content may include one or more objects, and may include one or more visual effects related to the one or more objects. Augmented reality content may be generated that applies the one or more visual effects to additional objects shown in the camera's field of view. Third-party content may correspond to one or more products available for purchase through the client application.

In one embodiment, the AR system generates text scene objects including virtual text objects based on text and detects text selection gestures made by a user of the AR system. The AR system generates selection lines based on the landmarks of the user's hands. The AR system detects a confirmation gesture made by the user and sets the text selection starting point at the intersection of the selection line and the starting virtual text object in one or more virtual text objects. The AR system detects subsequent confirmation gestures made by the user, sets the text selection end point, and selects the selected text from the text based on the text selection start point and the text selection end point.

In one embodiment, the patent describes a method for capturing multiple sequences of views of a three-dimensional object using multiple virtual cameras. The method generates aligned sequences from multiple sequences based on the arrangement of multiple virtual cameras relative to a three-dimensional object. Using a convolutional network, the system classifies three-dimensional objects based on aligned sequences and uses the classification to identify three-dimensional objects.
