Quest 3 developers can now integrate three major new features to improve both virtual and mixed reality experiences.

When Quest 3 was announced, Meta said Inside-Out Body Tracking (IOBT) would launch in December, with Generative Legs to follow. Occlusion for mixed reality via the Depth API, meanwhile, had previously been an experimental feature, meaning developers could test it but could not ship it in Quest Store or App Lab builds.

All three features are available as part of the v60 SDK for Unity and native code. The v60 Unreal Engine integration, meanwhile, includes the Depth API but not IOBT or Generative Legs.

Inside-Out Upper Body Tracking

Inside-Out Body Tracking (IOBT) uses Quest 3's side cameras, which face downward, together with advanced computer vision algorithms to track your wrists, elbows, shoulders, and torso.

IOBT avoids the problems of arms estimated with inverse kinematics (IK), which are often inaccurate and feel uncomfortable because the system is only guessing from the positions of your head and hands. When a developer integrates IOBT, you see your arms and torso in their actual positions rather than an estimate.

This upper body tracking also lets developers anchor thumbstick locomotion to the direction your body is facing, not just your head or hands. You can also perform new actions, such as leaning over a ledge, and have them show realistically on your avatar.
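To illustrate the body-anchored locomotion idea, here is a minimal sketch that rotates a thumbstick input by a torso heading. It is not Meta's API: the function name `body_relative_move`, the yaw convention, and the assumption that a torso yaw angle is available from body tracking are all hypothetical and chosen only for illustration.

```python
import math

def body_relative_move(stick_x, stick_y, torso_yaw_rad):
    """Convert thumbstick input into a world-space move direction.

    Hypothetical helper, not part of any Meta SDK.
    stick_x: thumbstick right (+) / left (-), stick_y: forward (+) / back (-).
    torso_yaw_rad: assumed torso heading, 0 = facing world +Z,
    increasing as the body turns right (clockwise seen from above).
    Returns (world_x, world_z).
    """
    sin_yaw = math.sin(torso_yaw_rad)
    cos_yaw = math.cos(torso_yaw_rad)
    # Torso forward is (sin, cos); torso right is (cos, -sin).
    world_x = stick_x * cos_yaw + stick_y * sin_yaw
    world_z = -stick_x * sin_yaw + stick_y * cos_yaw
    return world_x, world_z
```

With head-anchored locomotion, glancing sideways while pushing the stick forward would steer you sideways. Feeding in the torso heading instead keeps "forward" aligned with where your body points.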

AI Generative Legs

IOBT only works for your upper body. For your lower body, Meta has launched Generative Legs.

Generative Legs uses "cutting-edge" AI models to estimate the position of your legs - a technique the company has been working on for years.

On Quest 3, Generative Legs delivers a more realistic estimate than on previous headsets because it uses the upper body tracking as input. It also works on Quest Pro and Quest 2, using only the head and hands.

The Generative Legs system is only an estimator, not a tracker, so while it can detect movements such as jumping and crouching, it won't pick up many real leg movements, such as raising a knee.

Built-in upper body tracking and Generative Legs allow for a believable full body in virtual reality, called Full Body Synthesis, without the need for external hardware.

Meta previously announced that Full Body Synthesis will be used in Supernatural, Swordsman VR, and Drunken Bar Fight.

Depth API For Occlusion In Mixed Reality

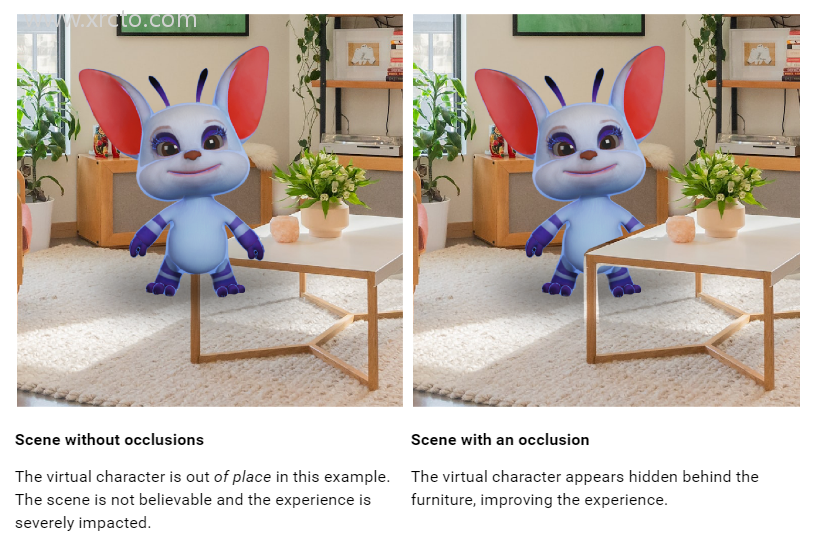

In our Quest 3 review, we heavily criticized the lack of dynamic occlusion in mixed reality. Virtual objects can appear behind the scene mesh, but they always show up in front of moving objects such as your arms and other people, even when they are farther away, which looks jarring and unnatural.

Developers can already implement dynamic occlusion for your hands using the hand tracking mesh, but few do because it cuts off at the wrist, so the rest of your arm is not included.

The new Depth API gives developers a coarse per-frame depth map generated by the headset from its point of view. This can be used to implement occlusion, both for moving objects and for finer details of static objects that may not have been captured in the scene mesh.

Dynamic occlusion should make mixed reality on Quest 3 look more natural. However, the headset's depth-sensing resolution is low enough that it won't pick up the spaces between your fingers, and you'll see a gap around the edges of objects.

The depth map is only recommended for use up to 4 meters away, after which "accuracy decreases significantly," so developers may also want to use the scene mesh for static occlusion.
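One way to combine the two sources can be sketched as a per-pixel fallback: trust the depth map inside the recommended range and prefer the scene mesh beyond it. This is an illustrative assumption rather than Meta's implementation; `occluder_depth` and its parameters are hypothetical, and only the 4 meter figure comes from the guidance above.

```python
import math

# Recommended upper limit for depth map samples, per the guidance above.
MAX_RELIABLE_DEPTH_M = 4.0

def occluder_depth(depth_map_m, scene_mesh_m):
    """Pick the real-world depth (in meters) to test a virtual pixel against.

    Hypothetical helper, not part of any Meta SDK.
    depth_map_m: depth map sample for this pixel, or None if unavailable.
    scene_mesh_m: distance to the scene mesh along this pixel, or None
    if the mesh does not cover it.
    """
    if depth_map_m is not None and depth_map_m <= MAX_RELIABLE_DEPTH_M:
        return depth_map_m   # near range: dynamic depth is usable
    if scene_mesh_m is not None:
        return scene_mesh_m  # far range: prefer static geometry
    if depth_map_m is not None:
        return depth_map_m   # no mesh here: best remaining guess
    return math.inf          # nothing known: treat as unoccluded
```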

Developers can implement occlusion in two ways: hard and soft. Hard occlusion is essentially free but has jagged edges, while soft occlusion has a GPU cost but looks much better. Looking at Meta's example clip, it's hard to imagine any developer choosing hard occlusion.

In either case, though, occlusion has to be implemented in shaders, either by using Meta's dedicated occlusion shaders or by adding it to your own custom shaders. This is far from a one-click solution, and supporting the feature could take significant developer effort.
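The difference between the two modes can be sketched as a per-pixel visibility test. The code below is a simplified CPU illustration, not Meta's shader code: the function names, the `band_m` blend width, and the use of a smoothstep fade are assumptions, and a real soft implementation would likely also sample and filter the depth map, which is where the GPU cost arises.

```python
def hard_occlusion(virtual_depth_m, real_depth_m):
    """Binary test: 1.0 if the virtual pixel is visible, 0.0 if hidden.

    Hypothetical helper. The abrupt 0/1 switch is what produces jagged
    edges when the depth map is coarse.
    """
    return 1.0 if virtual_depth_m <= real_depth_m else 0.0

def soft_occlusion(virtual_depth_m, real_depth_m, band_m=0.05):
    """Fade visibility across a band of band_m meters around the real surface.

    Hypothetical helper; band_m is an illustrative value, not an SDK default.
    Returns an alpha in [0, 1] to multiply into the virtual pixel.
    """
    # t is 0 when the virtual pixel sits band_m / 2 behind the real
    # surface and 1 when it sits band_m / 2 in front of it.
    t = (real_depth_m - virtual_depth_m) / band_m + 0.5
    t = min(1.0, max(0.0, t))
    return t * t * (3.0 - 2.0 * t)  # smoothstep for a gentle falloff
```

A virtual pixel exactly at the real surface gets an alpha of 0.5 in the soft version, so the boundary blends instead of flickering between visible and hidden.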

In addition to occlusion, developers can use the Depth API to implement depth-based visual effects in mixed reality, such as fog.
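As a rough idea of what a depth-based fog effect involves, the sketch below blends a pixel toward a fog color based on its real-world depth, using the standard exponential fog formula. The function names and the `density` value are hypothetical, and this is a generic graphics technique rather than anything specific to Meta's SDK.

```python
import math

def fog_factor(depth_m, density=0.35):
    """Exponential fog amount in [0, 1) for a real-world depth in meters.

    Hypothetical helper; density is an illustrative value.
    """
    return 1.0 - math.exp(-density * depth_m)

def apply_fog(color, fog_color, depth_m, density=0.35):
    """Blend an RGB passthrough color toward fog_color by the fog amount."""
    f = fog_factor(depth_m, density)
    return tuple((1.0 - f) * c + f * g for c, g in zip(color, fog_color))
```

Surfaces close to the headset stay almost unchanged, while distant walls fade toward the fog color.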

Documentation is available for both Unity and Unreal.