Excerpts from New AR/VR Patent Filings at the USPTO on December 30, 2023

Article citations and references: XR Navigation Network

(XR Navigation Network, December 30, 2023) The U.S. Patent and Trademark Office recently published a new batch of AR/VR patents. The XR Navigation Network has compiled the 51 entries below (click a patent title for details). For more patent disclosures, search the XR Navigation Network patent section at https://patent.nweon.com/, or join the XR Navigation Network AR/VR patent exchange WeChat group (see the end of the article for details).

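1.《Meta Patent | Dynamic speech directivity reproduction》

In one embodiment, the method described in the patent may include capturing, via a head-mounted microphone on the speaker's artificial reality device, speech input from a speaker who is communicating with a listener in an artificial reality environment; detecting the speaker's pose within the artificial reality environment and determining the speaker's position relative to the listener's position within that environment; processing the speech input based on the speaker's pose and relative position to create a directionally tuned speech signal for the listener; and transmitting the directionally tuned speech signal to the listener's artificial reality device.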
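To make "directionally tuned" concrete, here is a minimal 2D sketch that scales speech samples by a first-order directivity pattern (a cardioid when `alpha=0.5`) and by 1/r distance attenuation. The pattern, the `alpha` parameter, and the function names are illustrative assumptions, not the method claimed in the patent.

```python
import math


def directivity_gain(speaker_pos, speaker_facing, listener_pos, alpha=0.5):
    """Hypothetical first-order directivity: alpha=0 is omnidirectional,
    alpha=0.5 is a cardioid (full level in front, silent directly behind)."""
    dx = listener_pos[0] - speaker_pos[0]
    dy = listener_pos[1] - speaker_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return 1.0
    fx, fy = speaker_facing
    # Cosine of the angle between the speaker's facing direction and the listener
    cos_theta = (fx * dx + fy * dy) / (math.hypot(fx, fy) * dist)
    return (1 - alpha) + alpha * cos_theta


def tune_speech(samples, speaker_pos, speaker_facing, listener_pos, ref_dist=1.0):
    """Apply pose-dependent directivity plus 1/r attenuation beyond ref_dist."""
    gain = directivity_gain(speaker_pos, speaker_facing, listener_pos)
    dist = max(math.dist(speaker_pos, listener_pos), ref_dist)
    gain *= ref_dist / dist
    return [s * gain for s in samples]
```

A listener directly in front of the speaker hears full level, one at 90 degrees hears half, and one directly behind hears nothing under this assumed pattern.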

2.《Meta Patent | Methods and systems of performing low-density parity-check (ldpc) coding》

In one embodiment, a system and method for performing low-density parity-check (LDPC) coding may include a wireless communication device that determines a count of a plurality of information bits. The wireless communication device may select a codeword length based on that count. An LDPC encoder of the wireless communication device may generate a codeword for the information bits, and the device may send the codeword to an LDPC decoder of another wireless communication device.
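The summary does not say how the codeword length is chosen. As a hedged illustration only, the sketch below picks the shortest of the three LDPC codeword lengths used in IEEE 802.11n/ac (648, 1296, 1944 bits) that can carry all information bits at an assumed code rate, and adds the generic LDPC validity check (every parity-check row of H must sum to zero mod 2). The selection rule is an assumption, not the patent's.

```python
# LDPC codeword lengths (bits) from IEEE 802.11n/ac, used here as an example set
CODEWORD_LENGTHS = (648, 1296, 1944)


def select_codeword_length(num_info_bits, code_rate=0.5):
    """Hypothetical rule: shortest codeword whose payload (n * rate) fits all
    information bits; otherwise fall back to the longest length (the bits
    would then be split across several codewords)."""
    for n in CODEWORD_LENGTHS:
        if num_info_bits <= int(n * code_rate):
            return n
    return CODEWORD_LENGTHS[-1]


def syndrome_is_zero(h_matrix, codeword):
    """A vector c is a valid codeword iff H * c = 0 (mod 2)."""
    return all(sum(h * b for h, b in zip(row, codeword)) % 2 == 0 for row in h_matrix)
```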

3.《Meta Patent | Etch protection and quantum mechanical isolation in light emitting diodes》

In one embodiment, the patent describes LED devices and corresponding fabrication techniques. The LED device includes a first doped semiconductor layer, a second doped semiconductor layer of the opposite doping type, and a 2D array of light-emitting units disposed between the two doped semiconductor layers. Each light-emitting unit corresponds to a single pixel mesa and includes at least one quantum well. The LED device also includes a planarization layer between the first doped semiconductor layer and the 2D array. The planarization layer includes an undoped quantum barrier (QB) layer that completely covers the sidewall of each light-emitting unit in the 2D array. The undoped QB layer quantum-mechanically isolates the light-emitting units from one another, and the planarization (undoped QB) layer also protects the light-emitting units from etch-induced defects during the mesa pixelation process.

4.《Meta Patent | Personalized gesture recognition for user interaction with assistant systems》

In one embodiment, a method described in the patent comprises receiving, from a client system associated with a first user, a user request from the first user, wherein the user request comprises gesture input and speech input from the first user; determining an intent corresponding to the user request based on the gesture input, using a personalized gesture classification model associated with the first user; performing one or more tasks based on the determined intent and the speech input; and sending to the client system, in response to the user request, instructions for presenting the results of performing the one or more tasks.
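One simple way to picture a "personalized gesture classification model" is a per-user nearest-centroid classifier built from that user's own enrollment examples. The sketch below is a stand-in under that assumption; the class, the gesture labels, and the `GESTURE_TO_INTENT` table are hypothetical.

```python
import math


class PersonalizedGestureClassifier:
    """Hypothetical per-user model: one mean feature vector per gesture."""

    def __init__(self):
        self.centroids = {}  # gesture label -> mean feature vector for this user

    def enroll(self, label, examples):
        dim = len(examples[0])
        self.centroids[label] = [sum(e[i] for e in examples) / len(examples) for i in range(dim)]

    def classify(self, features):
        # Nearest centroid in Euclidean distance
        return min(self.centroids, key=lambda lbl: math.dist(features, self.centroids[lbl]))


# Hypothetical mapping from recognized gestures to assistant intents
GESTURE_TO_INTENT = {"point": "select_object", "thumbs_up": "confirm"}


def handle_request(clf, gesture_features, speech_text):
    """Resolve the intent from the gesture, then pair it with the speech input."""
    intent = GESTURE_TO_INTENT[clf.classify(gesture_features)]
    return {"intent": intent, "speech": speech_text}
```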

5.《Meta Patent | Virtual personal interface for control and travel between virtual worlds》

In one embodiment, the patent describes a virtual personal interface that can provide a user interface for interacting with the current XR application, provide a detailed view of selected items, let the user navigate between multiple virtual worlds without having to return to those worlds' home lobbies, run a second XR application within a world controlled by a first XR application, and provide 3D content separate from the current world. An XR application may be defined for use with the personal interface, creating 3D world portions and 2D interface portions that are displayed through the personal interface.

6.《Meta Patent | Virtual personal interface for control and travel between virtual worlds》

In one embodiment, the patent describes a virtual personal interface that can provide a user interface for interacting with the current XR application, provide a detailed view of selected items, let the user navigate between multiple virtual worlds without having to return to those worlds' home lobbies, run a second XR application within a world controlled by a first XR application, and provide 3D content separate from the current world. An XR application may be defined for use with the personal interface, creating 3D world portions and 2D interface portions that are displayed through the personal interface.

7.《Meta Patent | Three-dimensional face animation from speech》

In one embodiment, the patent describes a method of training a speech-driven 3D facial animation model. The method comprises determining a first correlation value for facial features based on an audio waveform from a first subject; generating a first mesh of the lower portion of a face based on the facial features and the first correlation value; updating the first correlation value when the difference between the first mesh and a ground-truth image of the first subject is greater than a preselected threshold; and providing a speech-animated three-dimensional face model to an immersive reality application accessed by a client device.
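The update rule ("keep updating the correlation value while the mesh error exceeds a threshold") can be illustrated with a toy model in which a single scalar correlation maps an audio feature to jaw opening, standing in for the lower-face mesh. The scalar model, the squared-error loss, and the gradient step are all assumptions for illustration.

```python
def train_correlation(audio_features, true_openings, corr=0.0, lr=0.1,
                      threshold=1e-3, max_steps=10_000):
    """Toy sketch: predicted lower-face 'mesh' = corr * audio feature.
    corr is updated by gradient descent only while the mean squared error
    against ground truth is above the preselected threshold."""
    n = len(audio_features)
    for _ in range(max_steps):
        predicted = [corr * a for a in audio_features]
        error = sum((p - t) ** 2 for p, t in zip(predicted, true_openings)) / n
        if error <= threshold:
            break  # mesh is close enough to ground truth; stop updating
        grad = sum(2 * (p - t) * a
                   for p, t, a in zip(predicted, true_openings, audio_features)) / n
        corr -= lr * grad
    return corr
```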

8.《Meta Patent | Browser enabled switching between virtual worlds in artificial reality》

In one embodiment, the system described in the patent is directed to a virtual web browser that provides interchangeable access to multiple virtual worlds. Browser tabs for corresponding website/virtual world pairs may be displayed with associated controls, and selecting these controls affects the instantiation of the virtual world's 3D content. Interacting with an object in a virtual world may automatically generate one or more tabs, making it easy to travel to the world associated with that object.

9.《Meta Patent | Lighting unit with zonal illumination of a display panel》

In one embodiment, an illumination unit for a display panel includes a light distribution module, an array of couplers, and a beam spot array generation module. The light distribution module includes a plurality of output waveguides and configurably distributes the illumination light among them. Each coupler is coupled to one of the output waveguides and is configured to out-couple a portion of the illumination light to propagate toward the display panel. The beam spot array generation module receives the portions of illumination light out-coupled by the couplers and converts each portion into an illumination light field that includes an array of beam spots and illuminates a zone of the display panel. Each beam spot is configured to illuminate a single pixel or sub-pixel within that zone.
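"Configurably distributing" light among waveguides suggests a local-dimming-style allocation. A minimal sketch, assuming one output waveguide per display zone and a split proportional to the brightest pixel each zone must show (the proportional rule is hypothetical):

```python
def distribute_illumination(zone_peak_levels, total_power=1.0):
    """Hypothetical zonal split: each output waveguide feeds one zone, and
    the available light is shared in proportion to each zone's peak demand.
    Dark zones receive no light, which is where the efficiency gain comes from."""
    demand = sum(zone_peak_levels)
    if demand == 0:
        return [0.0] * len(zone_peak_levels)
    return [total_power * level / demand for level in zone_peak_levels]
```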

10.《Meta Patent | Adjusting interpupillary distance and eye relief distance of a head-up display》

In one embodiment, the patent describes a system that determines a user's interpupillary distance and eye relief distance. The system may cause an adjustment component to adjust a first distance between a first lens and a second lens along a first axis based at least in part on the interpupillary distance, and to adjust a second distance of an optical component along a second axis.
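A minimal sketch of the two-axis adjustment: lens separation on the first axis follows the measured IPD, the optical component's position on the second axis follows eye relief, and both are clamped to a mechanical range and converted to actuator steps. The ranges, the step size, and the function names are hypothetical.

```python
def clamp(value, lo, hi):
    return max(lo, min(hi, value))


def lens_adjustments(ipd_mm, eye_relief_mm,
                     ipd_range=(58.0, 72.0), relief_range=(12.0, 22.0)):
    """Targets for axis 1 (lens separation) and axis 2 (eye relief),
    clamped to assumed mechanical limits in millimetres."""
    return clamp(ipd_mm, *ipd_range), clamp(eye_relief_mm, *relief_range)


def steps_to_move(current_mm, target_mm, mm_per_step=0.05):
    """Signed number of (hypothetical) actuator steps to reach the target."""
    return round((target_mm - current_mm) / mm_per_step)
```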

11.《Meta Patent | Optical assemblies, head-mounted displays, and related methods》

In one embodiment, the optical assembly described in the patent may include a projector assembly, a waveguide, and at least two standoffs. The projector assembly may include at least one light projector and a housing. The waveguide may include at least one input grating corresponding to the at least one light projector. The standoffs may be between the housing and the waveguide and may be configured for aligning the at least one light projector with the at least one input grating.

12.《Meta Patent | Adjusting interpupillary distance and eye relief distance of a head-up display》

In one embodiment, the patent describes a system that determines a user's interpupillary distance and eye relief distance. The system may cause an adjustment component to adjust a first distance between a first lens and a second lens along a first axis based at least in part on the interpupillary distance, and to adjust a second distance of an optical component along a second axis.

13.《Meta Patent | System and method for fabricating polarization selective element》

In one embodiment, the system described in the patent includes a surface relief grating configured to forward-diffract an input beam into two linearly polarized beams. The system further includes a waveplate optically coupled to the surface relief grating and configured to convert the two linearly polarized beams into two circularly polarized beams with orthogonal circular polarizations. These two beams interfere with each other to produce a polarization interference pattern.
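Why two orthogonal circularly polarized beams give a useful "polarization interference pattern" can be checked with textbook Jones calculus: their sum has constant intensity but is linearly polarized, with an orientation equal to half the local phase difference, so the orientation rotates across the pattern. This is a generic optics sketch, not the patent's fabrication procedure.

```python
import cmath
import math


def superpose_circular(delta):
    """Sum of left- and right-circular beams (unit intensity each) with
    relative phase delta; returns (linear orientation in rad, intensity)."""
    s = 1 / math.sqrt(2)
    # Jones vectors (1, i)/sqrt(2) and e^{i delta} * (1, -i)/sqrt(2)
    ex = s + s * cmath.exp(1j * delta)
    ey = s * 1j - s * 1j * cmath.exp(1j * delta)
    # Strip the common phase e^{i delta/2}; what remains is a real (linear) vector
    common = cmath.exp(-1j * delta / 2)
    ex, ey = ex * common, ey * common
    orientation = math.atan2(ey.real, ex.real) % math.pi
    intensity = abs(ex) ** 2 + abs(ey) ** 2
    return orientation, intensity
```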

14.《Microsoft Patent | Determining charge on a facial-tracking sensor》

In one embodiment, the patent describes a method of determining capacitance from the charge accumulated on a sense capacitor electrode. A head-mounted device includes a face-tracking sensor, a controller, and a charge sensing circuit connected to the face-tracking sensor. The face-tracking sensor includes a sense capacitor electrode configured to be positioned near a facial surface and to form a capacitance that depends on the distance between the electrode and the facial surface; the controller is configured to apply a reference voltage to the electrode. The charge sensing circuit determines the capacitance of the sense capacitor electrode by determining the amount of charge that the reference voltage causes to accumulate on it.
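The core relation is C = Q / V: with a known reference voltage, the accumulated charge gives the capacitance. To connect capacitance back to electrode-to-face distance, the sketch uses an idealized parallel-plate approximation, which is an assumption (real electrode-to-skin geometry is not a parallel plate).

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity, approximately, in F/m


def capacitance_from_charge(charge_c, reference_voltage_v):
    """C = Q / V for the charge accumulated under the reference voltage."""
    return charge_c / reference_voltage_v


def distance_from_capacitance(capacitance_f, electrode_area_m2, rel_permittivity=1.0):
    """Illustrative parallel-plate model: d = eps0 * eps_r * A / C."""
    return EPSILON_0 * rel_permittivity * electrode_area_m2 / capacitance_f
```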

15.《Microsoft Patent | Gesture recognition based on likelihood of interaction》

In one embodiment, the patent describes a method for evaluating gesture inputs that comprises receiving input data for a sequence of data frames. A first neural network, trained to recognize features indicative of upcoming gesture interactions, evaluates the input data for the sequence of data frames and outputs an indication of the likelihood that the user will perform a gesture interaction during a predetermined window of data frames. A second neural network is trained to recognize features indicative of whether the user is currently performing one or more gesture interactions, and is configured to adjust its gesture recognition parameters for the predetermined window based on the indicated likelihood. The second neural network then evaluates gesture interactions in the predetermined window using the adjusted parameters and outputs a signal indicating whether the user is performing the one or more gesture interactions during that window.
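One plausible reading of "adjusting parameters based on the likelihood" is lowering the second network's detection threshold when the first network predicts a gesture is coming. The sketch below treats both networks as opaque score producers; the linear threshold rule and its constants are hypothetical.

```python
def adjusted_threshold(likelihood, base=0.8, min_threshold=0.5):
    """Hypothetical rule: the more likely a gesture is (per the first network),
    the less confidence the second network needs to report one."""
    return base - (base - min_threshold) * likelihood


def detect_gestures(likelihood, frame_scores):
    """likelihood: first network's output for the window, in [0, 1].
    frame_scores: second network's per-frame gesture confidences."""
    thr = adjusted_threshold(likelihood)
    return [score >= thr for score in frame_scores]
```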

16.《Microsoft Patent | Pixel-based automated testing of a navigable simulated environment》

In one embodiment, the patent describes a computing system for automated, pixel-based testing of a navigable simulated environment. A processor of the system is configured to execute an application testing program during a runtime inference phase. The application testing program includes a machine learning model trained to detect errors in the application under test. An object detection module processes screen images of the simulated environment to determine whether critical objects are present. If a critical object is present in a screen image, the program executes an object investigation module that generates investigation inputs for that object; if not, it executes an environment exploration module that generates environment exploration actions, which are provided to the application under test as simulated user input.

17.《Apple Patent | Method and system for acoustic passthrough》

In one embodiment, the patent describes a method performed by a first headset worn by a first user. The first headset performs noise cancellation on a microphone signal captured by a microphone that is arranged to capture sound in the ambient environment where the first user is located. Over a wireless communication link, the first headset receives, from a second headset worn by a second user in the same environment, at least one acoustic characteristic of a sound, generated by at least one sensor of the second headset. The first headset then passes through the sound selected from the microphone signal based on the received acoustic characteristic.

18.《Apple Patent | Gaze-based exposure》

In one embodiment, the patent describes a processing pipeline and method for a mixed reality system. Said method uses selective automatic exposure for regions of interest in a scene based on gaze, and compensates for exposure of the rest of the scene based on ambient lighting information of the scene. An image for display can then be generated which provides the user with an exposure-compensated high dynamic range (HDR) experience.
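The gaze-based part of this pipeline can be sketched as spot metering: compute exposure only from a window around the gaze point so that region lands on an assumed mid-grey target of 0.18. The window radius, the target, and the function name are hypothetical, and the ambient-light compensation of the rest of the scene is not modeled here.

```python
def gaze_exposure(luma, gaze_xy, radius=1, target=0.18):
    """luma: 2D list of linear luminance in [0, 1]; gaze_xy: (x, y) pixel.
    Returns an exposure gain that maps the gazed-at region to the target."""
    gx, gy = gaze_xy
    roi = [luma[y][x]
           for y in range(max(0, gy - radius), min(len(luma), gy + radius + 1))
           for x in range(max(0, gx - radius), min(len(luma[0]), gx + radius + 1))]
    mean = sum(roi) / len(roi)
    return target / mean if mean > 0 else 1.0
```

Metering on the gaze region means a dark object the user is looking at gets brightened even if a bright window elsewhere dominates the frame average.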

19.《Apple Patent | Displays with viewer tracking for vertical parallax correction》

In one embodiment, the electronic device described in the patent may include a stereoscopic display with a plurality of lenticular lenses extending across the length of the display. The lenticular lenses enable stereoscopic viewing, so that a viewer perceives a three-dimensional image. The display may have different viewing zones that account for horizontal parallax as the viewer moves horizontally relative to the display. Based on the viewer's detected vertical position, the display may be globally dimmed, blurred, and/or blended with a default image. The display may also present content that compensates for the real-time vertical positions of multiple viewers.
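A minimal sketch of the vertical-position response: within an assumed comfort zone around the design eye height the content is shown unchanged; beyond it, the display blends toward a default image and dims, reaching full blend after an assumed falloff distance. All constants and the linear ramp are hypothetical.

```python
def vertical_correction(viewer_height_offset_m, comfort_zone_m=0.1, falloff_m=0.2):
    """Returns (blend_to_default in [0, 1], brightness in [0.5, 1])."""
    excess = abs(viewer_height_offset_m) - comfort_zone_m
    if excess <= 0:
        return 0.0, 1.0  # viewer is close enough to the design height
    blend = min(1.0, excess / falloff_m)
    return blend, 1.0 - 0.5 * blend
```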

20. The

In one embodiment, the patent describes a media editing method and graphical user interface. The media editing user interface includes user interface options and tools for capturing and editing media generated by multiple media recording devices, and for modifying the state of a media recording device to add corresponding content to a media stream. The interface includes representations of the media content directed from the corresponding media recording devices, and may present controls for changing the content of the media stream as well as the properties of the published and/or exported media stream.

21.《Apple Patent | Visual indication of audibility》

In one embodiment, the patent describes a method for displaying a visual indication of the audibility of an audible signal. The method includes receiving an audible signal via an audio transducer and converting it into electronic signal data; obtaining environmental data indicative of the audio response characteristics of the physical environment in which the device is located; and displaying an indicator that indicates a distance from the source of the audible signal, wherein the distance is based on a magnitude associated with the electronic signal data and on the audio response characteristics of the physical environment.
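Estimating a distance from signal magnitude can be illustrated with the free-field inverse-square law (level falls by 20·log10(d) dB from a 1 m reference), plus a hypothetical per-metre extra loss standing in for the room's audio response data. The level model is solved for distance by bisection in log space; the extra-loss term and function names are assumptions.

```python
import math


def level_at(distance_m, source_db_at_1m, extra_loss_db_per_m=0.0):
    """Predicted level: inverse-square spreading plus an assumed linear room loss."""
    return source_db_at_1m - 20 * math.log10(distance_m) - extra_loss_db_per_m * (distance_m - 1)


def estimate_distance(measured_db, source_db_at_1m, extra_loss_db_per_m=0.0,
                      lo=0.01, hi=1000.0):
    """Invert level_at by geometric bisection (level decreases with distance)."""
    for _ in range(100):
        mid = math.sqrt(lo * hi)
        if level_at(mid, source_db_at_1m, extra_loss_db_per_m) > measured_db:
            lo = mid  # prediction too loud: the source must be farther away
        else:
            hi = mid
    return math.sqrt(lo * hi)
```

With no extra loss, a source of 80 dB at 1 m measured at 74 dB is estimated at about 2 m (6 dB per doubling of distance).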

22.《Apple Patent | Showing context in a communication session》

In one embodiment, the method described in the patent provides a representation of at least a portion of a user within a 3D environment other than the user's physical environment. Based on detecting certain conditions, a representation of another object in the user's physical environment is shown to provide context. For example, a representation of a seating surface may be shown when the user is detected to be seated; a representation of a table and coffee cup may be shown when the user is detected reaching for the cup; a representation of a second user may be shown when a sound is detected or when the user turns their attention toward a moving object or a sound; and a depiction of a puppy may be shown when a puppy's barking is detected.

23.《Apple Patent | Tangibility visualization of virtual objects within a computer-generated reality environment》

In one embodiment, the patent describes techniques for visualizing the tangibility of virtual objects within a computer-generated reality (CGR) environment. Visual feedback indicating tangibility is provided for virtual objects in the CGR environment that do not correspond to real, tangible objects in the physical environment.

24.《Apple Patent | Context-based object viewing within 3d environments》

In one embodiment, a view of a 3D environment is presented. A context associated with viewing one or more media objects within the 3D environment is then determined. Based on the context, a viewing state for viewing one of the media objects within the 3D environment is determined. In accordance with a determination that the viewing state is a first viewing state, data associated with the media object is used to render it within the 3D environment so that it has an appearance of depth.

25.《Apple Patent | Warping an input image based on depth and offset information》

In one embodiment, the method described in the patent includes obtaining, via an image sensor, an input image that includes an object; obtaining depth information characterizing the object, wherein the depth information characterizes a first distance between the image sensor and a portion of the object; determining a distance warp map for the input image based on the depth information and a function of a first offset value that characterizes an estimated distance between the user's eye and the display device; setting operating parameters of the electronic device based on the distance warp map; and generating a warped image from the input image with the electronic device set to those operating parameters.
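Depth-dependent warping follows from the pinhole model: shifting the viewpoint by an offset moves a point at depth z by f·offset/z pixels, so near content moves more than far content. The single-row forward warp below, with a z-buffer so nearer points win, is a generic illustration under that assumption; it is not the patent's warp-map construction, and holes are simply left as `None`.

```python
import math


def warp_shift_px(depth_m, offset_m, focal_px):
    """Pinhole parallax: pixel shift = focal * offset / depth."""
    return focal_px * offset_m / depth_m


def warp_row(row, depths, offset_m, focal_px):
    """Forward-warp one image row; on collisions the nearer point wins.
    Pixels nothing lands on stay None (a real pipeline would fill them)."""
    out = [None] * len(row)
    out_depth = [math.inf] * len(row)
    for x, (value, z) in enumerate(zip(row, depths)):
        nx = round(x + warp_shift_px(z, offset_m, focal_px))
        if 0 <= nx < len(row) and z < out_depth[nx]:
            out[nx], out_depth[nx] = value, z
    return out
```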

In one embodiment, while displaying a three-dimensional environment that includes one or more virtual objects, the electronic device described in the patent detects the user's gaze directed at a first virtual object in the environment. The gaze satisfies a first criterion, and the first virtual object responds to at least one gesture input. In response to detecting gaze that satisfies the first criterion, the device displays an indication of one or more interaction options available for the first virtual object if it determines that the user's hand is in a predefined ready state for providing gesture input, and forgoes displaying that indication if it determines that the hand is not in the predefined ready state.
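The decision logic reduces to three conditions. In this sketch the "first criterion" is modeled as a gaze dwell time and the predefined ready state as a `"ready"` hand-state label; both are hypothetical stand-ins for whatever the device actually measures.

```python
def show_interaction_options(gaze_dwell_s, target_accepts_gestures, hand_state,
                             dwell_threshold_s=0.3):
    """True if the device should display the interaction-option indication."""
    if gaze_dwell_s < dwell_threshold_s or not target_accepts_gestures:
        return False  # gaze criterion not met, or object ignores gestures
    return hand_state == "ready"  # otherwise forgo displaying the indication
```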

27.《Apple Patent | Creation of optimal working, learning, and resting environments on electronic devices》

In one embodiment, the system described in the patent provides a computer-generated reality (CGR) environment in which a user engages in an activity (e.g., working, studying, or resting). The system identifies the user's cognitive state based on data about the user's body and updates the environment with surroundings that promote a cognitive state suited to that activity.

28.《Apple Patent | Display systems with optical sensing》

In one embodiment, the head-mounted device described in the patent may have catadioptric lenses, each including a partial reflector, a quarter-wave plate, and a polarizer. The device's optical system may include an infrared light-emitting device and an infrared light-sensing device. The optical system may illuminate the user's eye in the eye box and collect measurements from the illuminated eye box for eye tracking and other functions, operating through the catadioptric lens. To enhance the optical system's performance, the polarizer may be a wire-grid polarizer formed from wires that exhibit enhanced infrared transmission, and/or the quarter-wave plate may be formed from a cholesteric liquid crystal layer. The cholesteric liquid crystal layer acts as a quarter-wave plate at visible wavelengths but not at infrared wavelengths.

29.《Apple Patent | Controlling a user selection queue》

In one embodiment, the method described in the patent includes displaying a computer-generated reality (CGR) object in a CGR environment, the CGR object representing a physical item; compositing an affordance associated with the CGR object; detecting an input directed at the affordance; and, in response to detecting the input, adding an identifier of the physical item to a user selection queue.

30.《Apple Patent | Gaze behavior detection》

In one embodiment, an example process may include obtaining eye data associated with a gaze during a first time period; obtaining head data (e.g., head position and velocity) associated with the gaze during the first time period; and determining a first gaze behavior state for the first time period in order to identify gaze shift events, gaze hold events, and loss events.
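Combining eye and head data matters because the eyes counter-rotate when the head turns (the vestibulo-ocular reflex), so the gaze can be held steady even while both move. A minimal velocity-threshold classifier, assuming 1D angular velocities and a hypothetical 100 deg/s shift threshold:

```python
def classify_gaze(eye_velocity_dps, head_velocity_dps, eye_tracked=True,
                  shift_threshold_dps=100.0):
    """Label one time period as 'shift', 'hold', or 'loss'.

    Gaze-in-world velocity is approximated as eye-in-head velocity plus
    head velocity, so compensatory eye movement during a head turn reads
    as a hold."""
    if not eye_tracked:
        return "loss"
    gaze_velocity = abs(eye_velocity_dps + head_velocity_dps)
    return "shift" if gaze_velocity > shift_threshold_dps else "hold"
```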

31.《Apple Patent | Controlling a device setting using head pose》

In one embodiment, the head-mounted device described in the patent may use changes in head pose as user input. In particular, a display in the head-mounted device may show a slider with an indicator. The slider may visually represent the scalar value of a device setting such as volume or brightness. Based on changes in head pose, the scalar value of the device setting and the position of the indicator on the slider may be updated, with the direction of head movement corresponding to the direction in which the indicator moves along the slider.
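A minimal sketch of the head-pose slider: a change in head pitch moves the setting value in the same direction, clamped to [0, 1], and the indicator is drawn at the corresponding fraction of the slider width. The 30-degree full-range gain and the function names are hypothetical.

```python
def update_setting(value, pitch_change_deg, degrees_for_full_range=30.0):
    """Tilting the head up (positive pitch change) raises the setting."""
    new_value = value + pitch_change_deg / degrees_for_full_range
    return min(1.0, max(0.0, new_value))


def indicator_x(value, slider_left_px, slider_width_px):
    """Horizontal pixel position of the indicator for a value in [0, 1]."""
    return slider_left_px + value * slider_width_px
```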

32.《Apple Patent | Electronic devices with deformation sensors》

In one embodiment, the head-mounted device described in the patent may have a head-mounted support structure configured to be worn on a user's head. The device may have stereoscopic optical components, such as left and right cameras or left and right display systems, with respective left and right pointing vectors. Deformation of the support structure may cause the camera pointing vectors and/or the display system pointing vectors to become misaligned. Sensor circuitry such as strain gauge circuitry may measure this misalignment, and control circuitry may control the cameras and/or display systems to compensate for the measured changes in pointing vector alignment.
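The misalignment being measured is the angle between the left and right pointing vectors. The sketch computes it from the dot product; the tolerance that triggers compensation is a hypothetical value.

```python
import math


def misalignment_deg(left, right):
    """Angle in degrees between two 3D pointing vectors of any length."""
    dot = sum(a * b for a, b in zip(left, right))
    norms = math.hypot(*left) * math.hypot(*right)
    cos_angle = max(-1.0, min(1.0, dot / norms))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def needs_compensation(left, right, tolerance_deg=0.1):
    """Hypothetical trigger for the control circuitry to compensate."""
    return misalignment_deg(left, right) > tolerance_deg
```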

33.《Apple Patent | Electronic device with lens position sensing》

In one embodiment, a head-mounted device may have displays with pixel arrays that present content to a user. A head-mounted support structure holds the pixel arrays on the user's head. A left positioner may position a left lens assembly that includes a left lens and a first pixel array, and a right positioner may position a right lens assembly that includes a right lens and a second pixel array. Control circuitry may use interpupillary distance information to adjust the positions of the left and right lens assemblies, and sensing circuitry such as force sensing circuitry may be used to ensure that the lens assemblies do not press too hard against the user's nose.

34.《Apple Patent | Prescription lens enrollment and switching for optical sensing systems》

In one embodiment, the system may perform a method to recognize when one or more lenses have the wrong prescription for a user, and then prompt the user to switch prescription lenses or initiate an alignment process for the lenses. The system may identify the prescription of the lenses and compare it with one or more prescriptions enrolled for the user, notifying the user to switch lenses when the identified prescription does not match the user's expected enrolled prescription.
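The comparison step can be sketched as matching an identified prescription (sphere, cylinder, axis) against the user's enrolled prescriptions within tolerances. The tolerances, the prompt text, and the data layout are hypothetical; note that cylinder axis wraps at 180 degrees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prescription:
    sphere: float    # diopters
    cylinder: float  # diopters
    axis: int        # degrees, 0 to 180


def matches(a, b, power_tol=0.25, axis_tol=10):
    axis_diff = abs(a.axis - b.axis) % 180
    axis_diff = min(axis_diff, 180 - axis_diff)  # 5 and 175 degrees are 10 apart
    return (abs(a.sphere - b.sphere) <= power_tol
            and abs(a.cylinder - b.cylinder) <= power_tol
            and axis_diff <= axis_tol)


def check_lenses(identified, enrolled):
    """None if the inserted lenses match an enrolled prescription, else a prompt."""
    if any(matches(identified, p) for p in enrolled):
        return None
    return "Please switch to your prescription lenses."
```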

35.《Apple Patent | Augmented reality maps (Apple Patent: AR Maps)》

In one embodiment, a handheld communication device may capture and display a live video stream. The device detects its geographic location and camera orientation, and a route from that location to a point of interest is determined. The captured video stream is visually augmented with indicators showing the direction of travel to the point of interest, superimposed on the live video.
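Orienting the overlay arrow needs two numbers: the bearing from the device's location to the next route point, and the camera's compass heading. The sketch uses the standard great-circle initial-bearing formula; `arrow_angle_deg` is a hypothetical helper returning the arrow direction relative to where the camera points (0 = straight ahead, 90 = to the right).

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(x, y)) % 360


def arrow_angle_deg(device_lat, device_lon, camera_heading_deg, next_lat, next_lon):
    """Direction to draw the arrow relative to the camera's heading."""
    bearing = initial_bearing_deg(device_lat, device_lon, next_lat, next_lon)
    return (bearing - camera_heading_deg) % 360
```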

36.《Google Patent | Device tracking with integrated angle-of-arrival data》

In one embodiment, a head-mounted display determines the pose of a device based on a combination of angle-of-arrival (AOA) data generated by, for example, an ultra-wideband (UWB) positioning module and inertial data generated by an inertial measurement unit (IMU). The head-mounted display fuses the AOA data with the inertial data using data integration techniques.
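The patent does not specify its fusion technique here, so the sketch swaps in a plain complementary filter on a single yaw angle: integrate the gyro for smooth short-term motion, and pull toward each new UWB AOA reading to cancel gyro drift. The 1D simplification and the `alpha` weight are assumptions.

```python
def fuse_yaw(prev_yaw_deg, gyro_rate_dps, dt_s, aoa_deg=None, alpha=0.98):
    """Complementary filter on yaw (degrees, 0 to 360).

    Prediction comes from IMU gyro integration; when an AOA sample is
    available, a fraction (1 - alpha) of the disagreement is corrected."""
    predicted = prev_yaw_deg + gyro_rate_dps * dt_s
    if aoa_deg is None:
        return predicted % 360
    # Shortest signed angular difference, so 359 and 1 degrees are 2 apart
    error = (aoa_deg - predicted + 180) % 360 - 180
    return (predicted + (1 - alpha) * error) % 360
```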

37.《Google Patent | List navigation with eye tracking》

In one embodiment, the technology described in the patent displays a plurality of positions arranged around a center position, including at least one empty position and filled positions that display a first subset of the list elements; the filled positions include positions adjacent to the at least one empty position. Movement of the user's gaze point relative to the filled positions, around the center position toward the at least one empty position, may be tracked. The at least one empty position may then be filled with an element from the list, and the adjacent positions emptied, so that a second subset of the list elements is displayed.

38.《Google Patent | Volumetric performance capture with neural rendering》

In one embodiment, the techniques described in the patent may include initially capturing images from multiple viewpoints that depict a subject under various lighting conditions, using a light stage together with depth data for the subject from an infrared camera. A neural network may extract features of the subject from the images based on the depth data and map the features into a texture space. A neural renderer may then generate an output image depicting the subject from a target view, with the subject's illumination in the output image consistent with the target view. The neural rendering may resample the subject's features from texture space into image space to generate the output image.

39.《Sony Patent | Image generation apparatus, image generation method, and program》

In one embodiment, the image generation apparatus described in the patent includes a player identification portion configured to identify the body of a player; a viewpoint acquisition portion configured to acquire viewpoint information including a viewpoint position and a viewpoint direction; a mesh generation portion configured to generate, based on the identification, a mesh structure of the player that reflects the skeleton of the player's body; and an image generation portion configured to render the player's mesh structure and a virtual object as viewed from the viewpoint position in the viewpoint direction, and to superimpose the rendered virtual object on the rendered mesh structure of the player to generate an image.

In one embodiment, the information processing apparatus described in the patent includes: a contour extraction unit that extracts a contour of a subject from a captured image of the subject; a feature quantity extraction unit that extracts feature quantities by sampling points from the points that make up the contour; an estimation unit that estimates, as the pose of the subject, a pose that closely matches the feature quantities; and a determination unit that determines the estimation accuracy of the estimation unit using the matching cost obtained when the estimation unit performs the estimation.

41.《Sony Patent | Haptic system and method》

In one embodiment, the haptic feedback method described in the patent comprises: providing a wearable haptic feedback device comprising one or more bone conduction haptic feedback units, and driving the one or more bone conduction haptic feedback units with at least a first signal processed using a bone conduction head-related transfer function (bHRTF).
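Processing a drive signal "using a bHRTF" can be pictured the same way an ordinary HRTF is applied: convolving the signal with the corresponding impulse response. The sketch is plain direct-form convolution; the impulse response values would come from a measured bHRTF, and the ones in the usage example are made up.

```python
def apply_bhrtf(signal, impulse_response):
    """Time-domain application of a (hypothetical) bone-conduction
    head-related impulse response by direct convolution."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out
```

For example, `apply_bhrtf([1.0, 1.0], [0.5, 0.25])` yields `[0.5, 0.75, 0.25]`.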

42.《Sony Patent | Display apparatus (Sony Patent: Display apparatus)》

In one embodiment, the display device described in the patent includes a display unit group containing at least two display units arranged in a circumferential direction. Each display unit includes a screen and a reflective holographic lens: an object image is projected onto the screen by a projection device, and the reflective holographic lens diffracts the object image and directs it to the observer's pupil.

43.《Sony Patent | Information processing apparatus, information processing method, and recording medium》

In one embodiment, the patent describes an information processing apparatus comprising: an acquisition portion that acquires an image captured by a predetermined imaging portion; an estimation portion that estimates a first position and a second position in real space; and a measurement portion that measures the distance between the first position and the second position based on the estimation.

In one embodiment, the patent describes a method for classifying virtual reality (VR) content for use in a head-mounted display. The method comprises accessing a model that identifies a plurality of learned patterns associated with generating corresponding baseline VR content that may cause discomfort; executing a first application to generate first VR content; extracting data associated with simulated user interactions with the first VR content, the extracted data being generated during execution of the first application; and comparing the extracted data with the model to identify one or more patterns in the extracted data that match at least one of the model's learned patterns, indicating that those patterns may cause discomfort.

In one embodiment, the method described in the patent includes capturing images with multiple cameras and associating each image with its camera pose; for each camera, determining a first contribution of its image to a first virtual view to be displayed on a first monitor and a second contribution of that image to a second virtual view to be displayed on a second monitor; for each camera, determining a first confidence map for the first virtual view based on the camera pose and the camera's position relative to a first virtual camera, and a second confidence map for the second virtual view based on the camera pose and the camera's position relative to a second virtual camera; generating the first virtual view by combining the first contributions using the first confidence maps; and generating the second virtual view by combining the second contributions using the second confidence maps.
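The combining step can be read as a per-pixel, confidence-weighted average of each camera's contribution; the same function would be called once with the first confidence maps for the first virtual view and once with the second maps for the second view. How the confidences are derived from camera pose is not modeled here, and the flat per-pixel lists are a simplifying assumption.

```python
def blend_view(contributions, confidences):
    """contributions[c][p]: camera c's reprojected value for pixel p.
    confidences[c][p]: that camera's confidence for pixel p.
    Returns the normalized weighted average per pixel, or None where
    no camera has any confidence."""
    view = []
    for p in range(len(contributions[0])):
        total_w = sum(conf[p] for conf in confidences)
        if total_w == 0:
            view.append(None)
            continue
        weighted = sum(contrib[p] * conf[p] for contrib, conf in zip(contributions, confidences))
        view.append(weighted / total_w)
    return view
```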

In one embodiment, a method for processing a secure virtual event by an electronic device within a virtual environment includes: receiving at least one input from a first user avatar to create at least one virtual event within the virtual environment; identifying at least one second user avatar to participate in the created virtual event based on the input received from the first user avatar and the nature of the virtual event; and determining at least one security policy corresponding to at least one action associated with the first user avatar and to at least one parameter used to create the virtual event.

47.《Samsung Patent | Display apparatus for providing expanded viewing window》

In one embodiment, the patent describes a display device capable of providing an expanded viewing window. The device comprises a light guide plate that includes an input coupler and an output coupler, and an image providing device that provides an image. The input coupler may comprise a plurality of sub-couplers configured to cause the image provided by the image providing device to propagate at different angles within the light guide plate.

48.《Samsung Patent | Display module and head-mounted display device therewith》

In one embodiment, the patent describes a display module comprising a plurality of light-emitting elements and a sealing element that seals them. The sealing element comprises a base portion, which comprises a transparent material, and a cover layer. The cover layer is in contact with a surface of the base portion and comprises a plurality of first patterns, each engraved in intaglio to a first depth, and a plurality of second patterns, each engraved in intaglio to a second depth different from the first depth.

49.《Qualcomm Patent | Protection of user's health from overindulgence in metaverse》

In one embodiment, the patent describes a system that protects a user from overindulging in the metaverse based on the user's biometrics (e.g., blood oxygen saturation, heart rate, body temperature, blood pressure). If the biometrics indicate that the metaverse presentation is impairing the user's health, the presentation may be altered to curb the overindulgence.

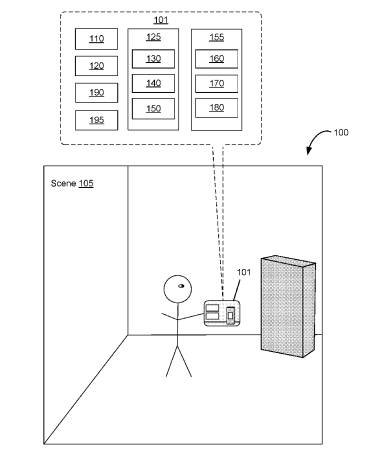
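The check-then-adjust loop in the Qualcomm summary can be sketched in a few lines of Python. Everything specific here is an assumption: the `Biometrics` fields, the placeholder ranges in `SAFE_RANGES`, and the escalation policy in `presentation_action` are hypothetical and do not come from the patent, which only says the presentation "may be altered".

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Biometrics:
    spo2_percent: float    # blood oxygen saturation
    heart_rate_bpm: float
    body_temp_c: float
    systolic_mmhg: float   # blood pressure (systolic)


# Placeholder ranges for illustration only; the patent summary gives no values.
SAFE_RANGES: Dict[str, Tuple[float, float]] = {
    "spo2_percent": (92.0, 100.0),
    "heart_rate_bpm": (50.0, 120.0),
    "body_temp_c": (35.5, 38.0),
    "systolic_mmhg": (90.0, 140.0),
}


def out_of_range(sample: Biometrics) -> List[str]:
    """Return the names of readings that fall outside their placeholder range."""
    return [
        name
        for name, (low, high) in SAFE_RANGES.items()
        if not low <= getattr(sample, name) <= high
    ]


def presentation_action(sample: Biometrics) -> str:
    """Hypothetical policy: one abnormal reading tones content down, more suggest a break."""
    flags = out_of_range(sample)
    if not flags:
        return "continue"
    return "reduce_intensity" if len(flags) == 1 else "suggest_break"


print(presentation_action(Biometrics(97.0, 150.0, 36.8, 120.0)))  # reduce_intensity
```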

50.《Qualcomm Patent | Object detection and tracking in extended reality devices》

In one embodiment, the patent describes methods for detecting and tracking objects in an extended reality environment. For example, a computing device may detect an object in a placement region of a mixed environment. In response, the computing device may determine at least one parameter of the object, align the object based on the at least one parameter, and track the object's movement based on the alignment. In additional embodiments, the computing device may capture at least one image of the object, generate a plurality of data points from the at least one image, generate a multi-dimensional model from the data points, and generate a plurality of action points from the multi-dimensional model. The computing device may then track the object's movement based on the action points.

51.《Qualcomm Patent | Physical layer association of extended reality data》

In one embodiment, the patent describes a method for wireless communication. A user equipment (UE) may receive first control information comprising a first packet data unit (PDU) set identifier (ID) associated with a set of multiple PDUs that together represent a unit of data for decoding. Based on the first control information, the UE may identify that a PDU is part of the PDU set corresponding to the first PDU set ID, and determine whether to include the PDU in a communication with a network entity based on its membership in that set.
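One way to picture a set-aware decision is sketched below in Python. The policy is an assumption, not the patent's: it treats a PDU set as useful only when every PDU in it is present, and drops the members of incomplete sets. The `Pdu` fields (including a known `set_size`) and the function `pdus_to_include` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Pdu:
    set_id: int    # PDU set ID, as signalled in the control information
    index: int     # position of this PDU within its set (0-based)
    set_size: int  # number of PDUs in the set (assumed to be known)


def pdus_to_include(pdus: List[Pdu]) -> List[Pdu]:
    """Hypothetical policy: include a PDU only if every PDU of its set is present,
    on the assumption that a partial set cannot be decoded by the receiver."""
    present: Dict[int, Set[int]] = defaultdict(set)
    sizes: Dict[int, int] = {}
    for pdu in pdus:
        present[pdu.set_id].add(pdu.index)
        sizes[pdu.set_id] = pdu.set_size
    complete = {sid for sid, idx in present.items() if len(idx) == sizes[sid]}
    return [pdu for pdu in pdus if pdu.set_id in complete]


# Set 7 is complete; set 8 is missing one of its three PDUs.
buffer = [Pdu(7, 0, 2), Pdu(7, 1, 2), Pdu(8, 0, 3), Pdu(8, 2, 3)]
print([(p.set_id, p.index) for p in pdus_to_include(buffer)])  # [(7, 0), (7, 1)]
```

The point of grouping by set ID is that the decision is made per set rather than per packet, which is the idea the summary describes.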

52.《Qualcomm Patent | Methods and apparatus for foveated compression》

In one embodiment, the system described in the patent may render at least one frame including display content at a server; downscale the frame; transmit the downscaled frame to a client device; encode the downscaled frame; decode the encoded frame; and upscale the frame.
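The downscale-then-upscale round trip can be sketched in Python as follows. The summary does not say how foveation enters the pipeline; this sketch assumes a foveal rectangle is restored at full resolution after upscaling, and it omits encoding and decoding entirely. All function names and the nested-list frame format are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # hypothetical: rows of integer pixel values


def downscale_2x(frame: Frame) -> Frame:
    """Server side: average each 2x2 block (frame dimensions assumed even)."""
    return [
        [
            (frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]) // 4
            for x in range(0, len(frame[0]), 2)
        ]
        for y in range(0, len(frame), 2)
    ]


def upscale_2x(frame: Frame) -> Frame:
    """Client side: nearest-neighbour upscale, each pixel becomes a 2x2 block."""
    result: Frame = []
    for row in frame:
        wide_row = [value for value in row for _ in range(2)]
        result.append(wide_row)
        result.append(list(wide_row))
    return result


def foveated_reconstruct(original: Frame, fovea: Tuple[int, int, int, int]) -> Frame:
    """Upscale the low-resolution frame, then restore full-resolution pixels inside
    the foveal rectangle (y0, y1, x0, x1). Sending that region separately at full
    resolution is an assumption of this sketch."""
    y0, y1, x0, x1 = fovea
    reconstructed = upscale_2x(downscale_2x(original))
    for y in range(y0, y1):
        for x in range(x0, x1):
            reconstructed[y][x] = original[y][x]
    return reconstructed


frame = [[0, 2, 4, 6], [2, 4, 6, 8], [10, 12, 14, 16], [12, 14, 16, 18]]
print(foveated_reconstruct(frame, fovea=(0, 2, 0, 2)))
# [[0, 2, 6, 6], [2, 4, 6, 6], [12, 12, 16, 16], [12, 12, 16, 16]]
```

Only a quarter of the peripheral pixels cross the link, while the region the eye is looking at keeps its detail.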

53.《Snap Patent | Whole body segmentation》

In one embodiment, the system performs operations comprising: receiving a monocular image that depicts a user's full body; generating a segmentation of the user's entire body based on the monocular image; accessing a video feed comprising a plurality of monocular images received before that monocular image; smoothing the full-body segmentation using the video feed to obtain a smoothed segmentation; and applying one or more visual effects to the monocular image based on the smoothed segmentation.
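The summary does not name a smoothing method. As an illustration only, the Python sketch below uses an exponential moving average over per-pixel body probabilities from earlier frames, then thresholds the result; `smooth_segmentation`, `alpha`, and the flattened mask format are hypothetical choices.

```python
from typing import List, Sequence

Mask = List[float]  # hypothetical: flattened per-pixel body probabilities in [0, 1]


def smooth_segmentation(
    history: Sequence[Mask], current: Mask, alpha: float = 0.6, threshold: float = 0.5
) -> List[int]:
    """Exponential moving average over earlier masks (oldest first) and the current
    mask, then thresholded to a binary mask. alpha weights the newest frame."""
    frames = list(history) + [current]
    state = list(frames[0])
    for mask in frames[1:]:
        state = [alpha * new + (1 - alpha) * old for new, old in zip(mask, state)]
    return [1 if p >= threshold else 0 for p in state]


# The first pixel was confidently "body" in two earlier frames but dips in the current one.
print(smooth_segmentation([[1.0, 0.0], [1.0, 0.0]], [0.2, 0.0]))  # [1, 0]
```

Thresholding the current frame alone would drop the first pixel, producing a one-frame flicker in the applied effect; the averaged history keeps it.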

54.《Snap Patent | Augmented reality image reproduction assistant》

In one embodiment, an image replication assistant application is configured to help a user reproduce a digital image on a physical canvas using conventional media. The image replication assistant uses augmented reality to present features of the digital image projected onto the physical canvas. It detects previously generated markers in the output of the digital image sensor of a computing device's camera and uses the detected markers to calculate the plane and boundaries of the canvas surface. It then uses the calculated plane and boundaries to determine where to position the digital image on the computing device's display.

55.《Snap Patent | Double camera streams》

In one embodiment, an augmented reality effect is provided at a device that includes a display and a camera. A first stream of image frames captured by the camera is received, and the augmented reality effect is applied to it to generate a stream of augmented image frames, which is displayed on the display in real time. At the same time, a second stream of image frames corresponding to the first stream is saved to an initial video file. The second stream can later be retrieved from the initial video file and the augmented reality effect applied to it independently of the first stream.

56.《Snap Patent | Virtual selfie stick》

In one embodiment, the patent describes a method for generating a virtual selfie stick image. The method comprises: generating a raw selfie image with an optical sensor of a device, the optical sensor facing the user's face and the device held at arm's length from the face; instructing the user to move the device through a plurality of poses at arm's length; accessing image data generated by the optical sensor at those poses; and generating a virtual selfie stick image based on the raw selfie image and the image data.

57.《Snap Patent | Applying pregenerated virtual experiences in new location》

In one embodiment, the system performs operations comprising: selecting, via a messaging application, a virtual experience representing a previously captured real-world environment at a first location; accessing an image representing a new real-world environment at a second location, the image depicting a plurality of real-world objects; receiving an input selecting a first real-world object from among the real-world objects depicted in the image; and, based on the virtual experience, modifying the image accessed at the second location to depict the previously captured real-world environment together with the first real-world object.

58.《Snap Patent | Inferring intent from pose and speech input》

In one embodiment, the system performs operations comprising: receiving an image depicting a person; identifying a set of skeletal joints of the person and recognizing the person's pose based on the positions of those joints; receiving a voice input comprising a request to perform an AR operation, the request having an ambiguous intent; in response to receiving the voice input, resolving the ambiguous intent based on the pose of the person depicted in the image; and performing the AR operation according to the resolved intent.
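As a concrete case of pose-based disambiguation, the Python sketch below resolves a spoken "that" by finding the object the person is pointing at. The pointing heuristic (extend the shoulder-to-wrist ray and pick the candidate with the smallest angular error) is an assumption for illustration; the summary only says the pose is used. `resolve_pointing_target` and the 2D coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]  # 2D image coordinates (hypothetical)


def resolve_pointing_target(shoulder: Point, wrist: Point, candidates: Dict[str, Point]) -> str:
    """Return the candidate whose direction from the wrist best matches the
    shoulder-to-wrist direction, i.e. the object the person is pointing at."""
    arm_angle = math.atan2(wrist[1] - shoulder[1], wrist[0] - shoulder[0])

    def angular_error(name: str) -> float:
        x, y = candidates[name]
        target_angle = math.atan2(y - wrist[1], x - wrist[0])
        # Wrap the difference into [-pi, pi) before taking its magnitude.
        diff = (target_angle - arm_angle + math.pi) % (2 * math.pi) - math.pi
        return abs(diff)

    return min(candidates, key=angular_error)


# "Put a hat on that": the words alone do not say which object is meant.
target = resolve_pointing_target(
    shoulder=(0.0, 0.0),
    wrist=(1.0, 0.0),
    candidates={"lamp": (5.0, 0.2), "chair": (0.0, 5.0)},
)
print(target)  # lamp
```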

59.《Snap Patent | Light estimation method for three-dimensional (3d) rendered objects》

In one embodiment, the patent describes a method comprising: generating an image using a camera of a mobile device; accessing a virtual object corresponding to an object in the image; determining lighting parameters for the virtual object using a machine learning model pre-trained on a paired dataset, where the paired dataset comprises synthetic source data (an environment map and a 3D scan) and synthetic target data (a synthetic sphere image rendered with the same environment map); applying the lighting parameters to the virtual object; and displaying the shaded virtual object as a layer of the image on the mobile device's display.

60.《Snap Patent | Robotic learning of assembly tasks using augmented reality》

In one embodiment, the patent describes a method comprising: displaying a first virtual object in a display of an AR device; tracking, using the AR device, the manipulation of the first virtual object by a user of the AR device; identifying, based on the tracking, an initial state and a final state of the first virtual object, the initial state corresponding to an initial pose of the object and the final state corresponding to its final pose; and programming a robotic system by demonstration, using the tracked manipulation of the first virtual object to convey its initial state and final state.

61.《Snap Patent | Colocated shared augmented reality》

In one embodiment, the patent describes a method and system for creating a shared AR session. The method performs operations comprising: receiving, by a client device, an input selecting a shared AR experience from a plurality of shared AR experiences; determining, in response to receiving the input, one or more resources associated with the selected shared AR experience; determining, by the client device, that two or more users are located within a threshold proximity of the client device; and activating the selected shared AR experience in response to that determination.

62.《Snap Patent | Low-power hand-tracking system for wearable devices》

In one embodiment, the patent describes a method for a low-power hand-tracking system. The method includes polling a proximity sensor of a wearable device to detect a proximity event, the wearable device comprising a low-power processor and a high-power processor; operating a low-power hand-tracking application on the low-power processor in response to detecting the proximity event based on proximity data from the proximity sensor; and ending operation of the low-power hand-tracking application in response to at least one of: detecting and recognizing a gesture based on the proximity data, detecting a gesture without recognizing it, or detecting a lack of activity during a timeout period based on the proximity data.
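The start and stop conditions above form a small state machine, sketched here in Python. The `Reading` categories, the poll-count timeout, and the class name `LowPowerHandTracker` are hypothetical; the sketch models only the application's lifecycle and does not model the two processors.

```python
from enum import Enum, auto
from typing import List


class Reading(Enum):
    """Hypothetical classification of one proximity-sensor poll."""
    NOTHING = auto()               # no activity near the sensor
    PROXIMITY = auto()             # something is within sensor range
    GESTURE_RECOGNIZED = auto()    # gesture detected and recognized
    GESTURE_UNRECOGNIZED = auto()  # gesture detected but not recognized


class LowPowerHandTracker:
    """Lifecycle of the low-power hand-tracking application (processors not modelled)."""

    def __init__(self, timeout_polls: int = 3) -> None:
        self.running = False
        self.timeout_polls = timeout_polls
        self.idle_polls = 0
        self.log: List[str] = []

    def poll(self, reading: Reading) -> None:
        if not self.running:
            if reading is Reading.PROXIMITY:  # a proximity event starts tracking
                self.running, self.idle_polls = True, 0
                self.log.append("start")
            return
        if reading is Reading.GESTURE_RECOGNIZED:
            self._stop("recognized")
        elif reading is Reading.GESTURE_UNRECOGNIZED:
            self._stop("unrecognized")
        elif reading is Reading.NOTHING:
            self.idle_polls += 1
            if self.idle_polls >= self.timeout_polls:
                self._stop("timeout")
        else:  # further proximity activity resets the idle counter
            self.idle_polls = 0

    def _stop(self, reason: str) -> None:
        self.running = False
        self.log.append(f"stop:{reason}")


tracker = LowPowerHandTracker(timeout_polls=2)
for r in [Reading.NOTHING, Reading.PROXIMITY, Reading.NOTHING, Reading.NOTHING,
          Reading.PROXIMITY, Reading.GESTURE_RECOGNIZED]:
    tracker.poll(r)
print(tracker.log)  # ['start', 'stop:timeout', 'start', 'stop:recognized']
```

Each of the three exit paths named in the summary returns the tracker to the idle polling state, so the expensive path is never entered unless something actually happens near the sensor.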

63.《Snap Patent | Augmented reality waveguides with dynamically addressable diffractive optical elements》

In one embodiment, the patent describes a waveguide comprising a waveguide body made of an optically transmissive material whose refractive index differs from that of the surrounding medium, the body defining an output surface. The waveguide body is configured to propagate light by total internal reflection in one or more directions substantially tangential to the output surface. The waveguide also includes a DOE driver and one or more diffractive optical elements (DOEs), each configured to change its diffraction efficiency in response to a respective excitation.
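Total internal reflection only occurs when the waveguide body's refractive index is higher than that of the surrounding medium, and only for rays striking the surface beyond the critical angle given by Snell's law, sin θc = n_surround / n_body. The short Python sketch below computes that angle; the index value 1.5 is an illustrative, glass-like assumption, not a figure from the patent.

```python
import math


def critical_angle_deg(n_body: float, n_surround: float = 1.0) -> float:
    """Angle from the surface normal beyond which light is totally internally
    reflected (Snell's law: sin(theta_c) = n_surround / n_body)."""
    if n_body <= n_surround:
        raise ValueError("total internal reflection needs n_body > n_surround")
    return math.degrees(math.asin(n_surround / n_body))


def is_guided(angle_from_normal_deg: float, n_body: float, n_surround: float = 1.0) -> bool:
    """True if a ray at this internal angle stays trapped in the waveguide body."""
    return angle_from_normal_deg > critical_angle_deg(n_body, n_surround)


print(round(critical_angle_deg(1.5), 2))           # 41.81 (glass-like body in air)
print(is_guided(45.0, 1.5), is_guided(30.0, 1.5))  # True False
```

A DOE whose diffraction efficiency can be switched changes how much of this trapped light is redirected below the critical angle and out through the output surface.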

64.《Snap Patent | Color calibration tool for see-through augmented reality environment》

In one embodiment, the patent describes a color calibration system that lets a user adjust a hue/temperature parameter while the see-through display of a computing device displays a virtual color reference card. The virtual color reference card corresponds to a physical color reference card placed in front of the AR glasses. Based on the adjustments the user makes through the color calibration UI, the system changes the color properties of the see-through display. The user can keep adjusting these properties via user-selectable elements in the UI until the virtual color reference card, superimposed on the AR glasses wearer's field of view, matches the color of the physical card as seen by the wearer.

65.《Snap Patent | Augmented reality riding course generation》

In one embodiment, the AR-enhanced gameplay includes a map of a course that contains a plurality of virtual objects and defines a route for participants' personal mobility systems, such as scooters. The virtual objects are displayed in the field of view of each participant's augmented reality device. When the participant or their personal mobility system is detected near the real-world location of a virtual object, a performance characteristic of that personal mobility system is modified in response.
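A minimal Python sketch of the proximity-triggered modification follows. The object kinds, the speed-cap effects in `SPEED_EFFECTS`, the 1.5 m trigger radius, and the function `speed_cap_kmh` are all hypothetical; the summary only says that some performance characteristic is modified when the rider comes close to a virtual object.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical effect table: change to the scooter's speed cap, in km/h.
SPEED_EFFECTS = {"boost": 5.0, "slow": -5.0}


@dataclass
class VirtualObject:
    kind: str                      # key into SPEED_EFFECTS
    position: Tuple[float, float]  # real-world (x, y) in metres


def speed_cap_kmh(rider: Tuple[float, float], base_cap_kmh: float,
                  objects: List[VirtualObject], radius_m: float = 1.5) -> float:
    """Apply the effect of every virtual object within radius_m of the rider."""
    cap = base_cap_kmh
    for obj in objects:
        if math.dist(rider, obj.position) <= radius_m:
            cap += SPEED_EFFECTS.get(obj.kind, 0.0)
    return max(cap, 0.0)  # never command a negative speed cap


course = [VirtualObject("boost", (1.0, 0.0)), VirtualObject("slow", (10.0, 0.0))]
print(speed_cap_kmh((0.0, 0.0), 20.0, course))  # 25.0
```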

66.《Snap Patent | Wearable device location systems》

In one embodiment, the patent describes a location management process for wearable electronic devices. When the high-speed circuitry and the location circuitry are booted from a low-power state, the low-power circuitry manages them so that, once a location fix operation is initiated, location assistance data is automatically provided from the high-speed circuitry to the low-power circuitry. The high-speed circuitry returns to the low-power state after capturing the content associated with the initiation of the fix but before the fix completes. The high-speed circuitry then updates the location data associated with that content after the fix is complete.

67.《Lumus Patent | Head-mounted display eyeball tracker integrated system》

In one embodiment, the patent describes a head-mounted display with an integrated eye-tracking system. The head-mounted display comprises a light-transmitting substrate having two major surfaces and edges, optical means for coupling light into the substrate so that it propagates by total internal reflection, a partially reflective surface carried by the substrate and non-parallel to its major surfaces, a near-infrared light source, and a display source that projects within the photopic spectrum.

68.《Lumus Patent | Optical system including selective illumination》

In one embodiment, at least one processor is configured to select a light source from a plurality of light sources based at least in part on the relative position of the pupil of the eye. The selected light source is configured to illuminate, with a light beam, the portion of the eye-motion box corresponding to the pupil position. The at least one processor is further configured to activate the selected light source to illuminate that portion of the eye-motion box.
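The selection step can be sketched in Python as a nearest-region lookup. The assumptions here are that each light source illuminates one region of the eye-motion box described by a center point, and that the closest center to the measured pupil wins; the source layout, the millimetre units, and both function names are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def select_light_source(pupil: Point, sources: Dict[str, Point]) -> str:
    """Pick the source whose illuminated eye-motion-box region is centered closest
    to the measured pupil position."""
    return min(sources, key=lambda name: math.dist(pupil, sources[name]))


def drive_states(selected: str, sources: Dict[str, Point]) -> Dict[str, bool]:
    """Only the selected source is switched on; the others stay off."""
    return {name: name == selected for name in sources}


# Hypothetical layout: three sources covering the left, center and right of the box (mm).
sources = {"left": (-5.0, 0.0), "center": (0.0, 0.0), "right": (5.0, 0.0)}
chosen = select_light_source((3.2, 0.5), sources)
print(chosen, drive_states(chosen, sources))
# right {'left': False, 'center': False, 'right': True}
```

Lighting only the region where the pupil actually is, instead of the whole eye-motion box, is what makes the illumination "selective".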

69.《Lumus Patent | Optical-based validation of orientations of surfaces》

In one embodiment, the method described in the patent comprises: providing a sample having an externally flat first surface and an externally flat second surface, the second surface being nominally inclined at a nominal angle relative to the first surface; generating a first incident light beam (LB) directed toward the first surface and a second incident LB parallel to the first; obtaining a first returned LB by reflecting the first incident LB off the first surface; obtaining a second returned LB by folding the second incident LB by the nominal angle, reflecting the folded LB off the second surface, and folding the reflected LB again by the nominal angle; measuring a first angular deviation between the returned LBs; and deriving the actual inclination angle between the first and second surfaces based at least on the measured first angular deviation.
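The final derivation step can be illustrated with simple reflection geometry. This Python sketch assumes an autocollimation-style setup in which a tilt error δ on the second surface rotates its returned beam by 2δ relative to the first returned beam, so δ equals half the measured deviation; the patent summary does not give this formula, and the sign convention and function names are hypothetical.

```python
def actual_inclination_deg(nominal_deg: float, deviation_deg: float) -> float:
    """Assumes a surface tilted by an error delta rotates its reflected beam by
    2 * delta, so delta = deviation / 2 (sign convention chosen arbitrarily)."""
    return nominal_deg + deviation_deg / 2.0


def arcsec_to_deg(arcsec: float) -> float:
    """Convert arcseconds to degrees (3600 arcseconds per degree)."""
    return arcsec / 3600.0


# A 36-arcsecond deviation between the two returned beams on a nominally 45-degree facet.
angle = actual_inclination_deg(45.0, arcsec_to_deg(36.0))
print(f"{angle:.4f}")  # 45.0050
```

Because reflection doubles the angular error, the method is roughly twice as sensitive to the surface tilt as a direct measurement of the beam would suggest.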

70.《Vuzix Patent | Image light guide with zoned diffractive optic》

In one embodiment, an image light guide for conveying a virtual image includes a waveguide, an in-coupling diffractive optic operable to direct image-bearing light beams into the waveguide, and an out-coupling diffractive optic operable to direct the image-bearing beams from the waveguide toward a viewer eyebox. The out-coupling diffractive optic has two or more zones, each comprising a set of diffractive features: a first zone with a first set of diffractive features, and a second zone, adjacent to the first, with a second set of diffractive features. The out-coupling diffractive optic includes a first interface region formed by the first and second zones, and this interface region comprises a first set of subregions having the first set of diffractive features and a second set of subregions having the second set of diffractive features.

71.《HTC Patent | Head-mounted display device and display method therefor》

In one embodiment, the patent describes a head-mounted display device including a display, a lens group, and an actuator. The display generates an image beam. The lens group is disposed between the display and a target area and focuses the image beam to form a display image in the target area. The actuator is coupled to the display or the lens group and periodically moves it within a plane.

72.《Magic Leap Patent | Metasurfaces for redirecting light and methods for fabricating》

In one embodiment, the patent describes an optical system comprising an optically transmissive substrate having a surface that comprises a grating made up of a plurality of unit cells. Each unit cell includes a first nanobeam having a first width and a second nanobeam having a second width greater than the first width. The unit cells are spaced 10 nm to 1 μm apart. The heights of the first and second nanobeams are from 10 nm to 450 nm when the substrate's refractive index is greater than 3.3, and from 10 nm to 1 μm when the refractive index is less than or equal to 3.3.

73.《Magic Leap Patent | Eyepiece For Virtual, Augmented, Or Mixed Reality Systems》

In one embodiment, the patent describes an eyepiece waveguide for augmented reality. The eyepiece waveguide may comprise a transparent substrate having an in-coupling region, first and second orthogonal pupil expander (OPE) regions, and an exit pupil expander (EPE) region. The in-coupling region may be disposed between the first and second OPE regions and may split and redirect the input beam into first and second guided beams propagating inside the substrate, the first guided beam being directed to the first OPE region and the second guided beam to the second OPE region. The first and second OPE regions may divide the first and second guided beams, respectively, into a plurality of replicated, spaced-apart beams. The EPE region may redirect the replicated beams from the first and second OPE regions so that they exit the substrate.

In one embodiment, the patent describes methods for selecting a depth plane in a display system such as an augmented reality display system. The display may present virtual image content to a user's eye via image light, outputting image light with different amounts of wavefront divergence corresponding to different depth planes at different distances from the user. A camera may capture an image of the eye, and an indication of whether the user is recognized can be generated based on that image. The display may then output image light to the user's eye with an amount of wavefront divergence based at least in part on the generated indication.


76.《Magic Leap Patent | Virtual and augmented reality systems and methods》

In one embodiment, the patent describes a virtual or augmented reality display system that controls power inputs to the display system based on image data. The image data comprises a plurality of image data frames, each including a constituent color component of the rendered content and a depth plane on which that content is to be displayed. A light source, or a spatial light modulator relaying illumination from the light source, may receive signals from a display controller to adjust its power settings and/or control the depth of the displayed image content based on control information embedded in the image data frames.

77.《Niantic Patent | Localization using audio and visual data》

In one embodiment, a reference image of the client device's environment and a recorded sound are obtained. The recorded sound may be captured by the client device's microphone some time after the client device emits a localization sound. The reference image and the recorded sound may then be used to determine the client device's location in the environment.

78.《Niantic Patent | Compact head-mounted augmented reality system》

In one embodiment, the head-mounted device described in the patent may include a front portion, a rear portion, and one or more straps. The front portion may include an optical display configured to output image light to the user's eye. The rear portion may counterbalance the front portion to balance the weight of the headset. The one or more straps connect the front and rear portions so that they sit on opposite sides of the user's head.
