Vision Pro Hands-On Update: New Improvements After Half a Year of Optimization

Source: The Verge

(XR Navigation Network, January 17, 2024) Apple already offered hands-on time with the device when it first unveiled Vision Pro in June 2023, but the headset has been improved after half a year of optimization. That is why the company offered another hands-on session on January 16, US local time.

Now, The Verge has shared its impressions from trying Vision Pro again on January 16:

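It's a slushy, snowy day in New York City, the kind where I would normally look out the window and declare it a work-from-home day. But today I dragged myself out into the damp cold because Apple offered me (Victoria Song) and my colleague Nilay Patel demo time with the Apple Vision Pro.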

Nilay and a few others have tried the Vision Pro, but for most people the $3,499 headset has been shrouded in mystery. However, as I realized after half an hour of use, the Apple headset felt more familiar than I expected. Selecting the correct Light Seal via an iPhone face scan is very similar to setting up Face ID. Putting it on your head is no different from any other VR headset, except that its design and fabric headband are more Apple-esque. As with any other VR headset, you can feel it sitting on your head and ruining your hairstyle (if you have long hair like I do, you'll feel it bunching up in the back).

Once you put on the headset, you need to complete a simple eye-tracking setup: look at a series of dots and lightly pinch your fingers together. visionOS then gives you access to the app launcher, which looks very similar to Launchpad on a Mac. The only difference is that you can still see the room around you in visionOS, if you wish. On the top right-hand side there is a Digital Crown. As an Apple Watch user, I'm very familiar with this. You can use it to recenter the home screen or immerse yourself in a virtual environment. There's a button on the back for taking spatial photos and videos, and it again looks very similar to the Apple Watch's side button.

As with the previous demo, eye tracking is fast and accurate. All you have to do is look at a menu item or button and the system instantly highlights it. As my eyes roamed through the Apple TV app, movie titles were highlighted one after another. Apple let us open a virtual keyboard in Safari to navigate to a website. It works, but it's a little clunky: you look at a letter and pinch your fingers together to select it. You can type as fast as your eyes can move.

I had a bit of trouble with the pinch and double-pinch gestures at first because I was apparently holding the pinch too long when trying to select. I was then told to just lightly pinch and release, much like the double tap gesture on the Apple Watch.

The demo we got was similar to Nilay's experience at WWDC, except Apple added a few new things. Even so, reading about Nilay's hands-on experience months ago and seeing it for myself are two completely different things. I knew how over-the-top the display specs were. But even knowing that, my eyes weren't really ready for two displays that together pack 23 million pixels, more than a 4K screen for each eye. To avoid dry eyes, I had to remind myself to actively blink.

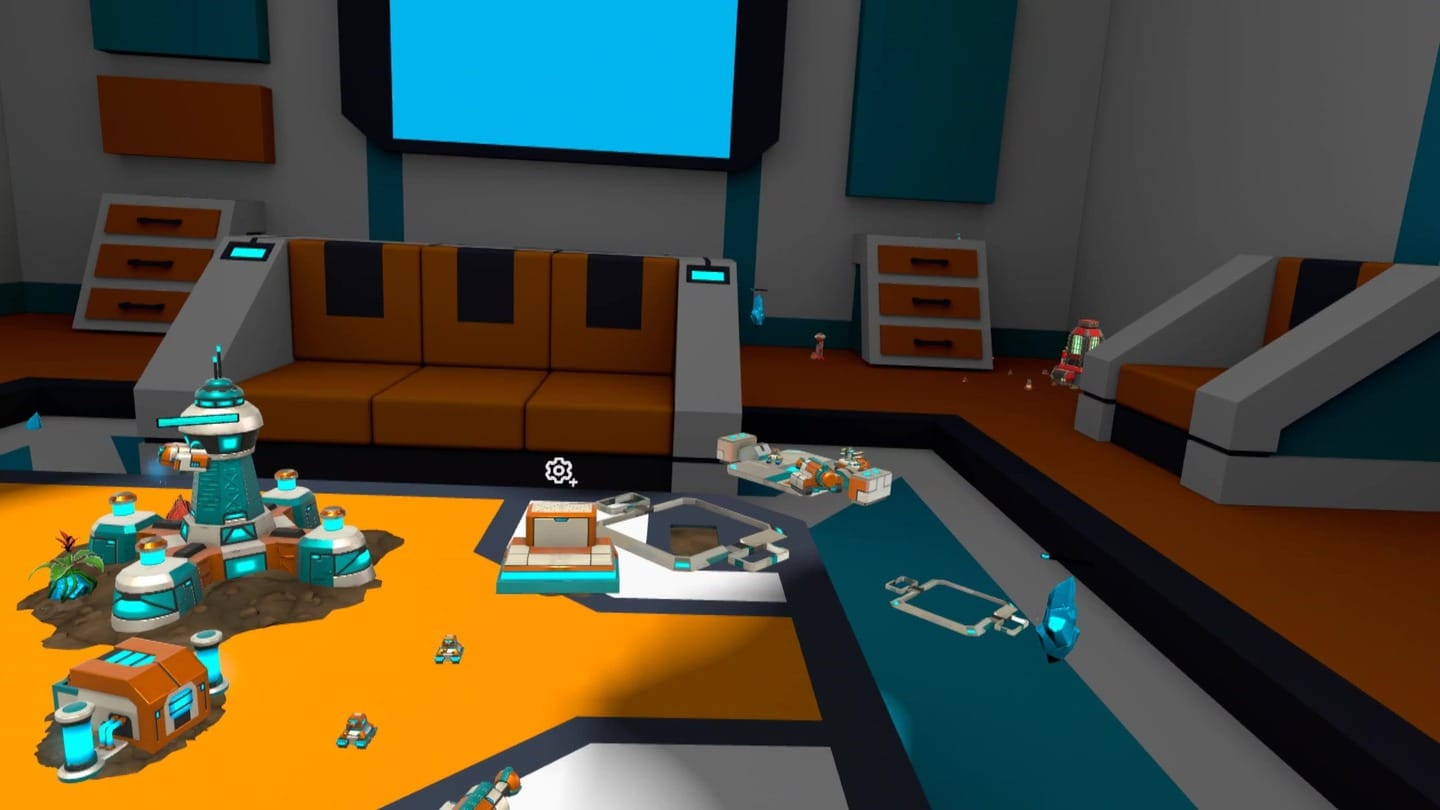

The virtual world in Vision Pro feels like a higher-resolution version of what Meta has been trying to do with Quest, but with a much more powerful M2-based computer built in. I can place an app to my upper right, up toward the ceiling, and if I want, I can view photos at the same time. Ripping the tires off an Alfa Romeo F1 car in JigSpace was fun. There's a real novelty in opening the Disney+ app and watching Star Wars trailers in a virtual environment that looks like the planet Tatooine. I did actually shrink back when the Tyrannosaurus rex and I locked eyes. The virtual environment of the Haleakala volcano, on the other hand, surprised me because the textures of the rocks looked very realistic. It was all very familiar. Apple executed it well, with no noticeable lag.

Apple let us load spatial videos and panoramas we had taken ourselves into Vision Pro to view them, and the results were compelling, but they worked best when the camera was stationary. Nilay shot some spatial video in which he deliberately moved the camera around to follow his kids at the zoo, and watching it brought on the familiar VR motion sickness. Apple says it is doing everything it can to minimize the problem, but apparently some footage works better in spatial form than others. We'll have to keep testing outside of Apple's carefully controlled environment to really figure out the limitations.

Apple has always emphasized that the Vision Pro isn't meant to cut you off from the world; the display on the front of the headset is meant to keep you connected to others. That's why we saw the EyeSight demo: when onlookers look at someone wearing the Vision Pro, they see the area around the wearer's eyes on the front display. When Apple's Vision Pro demonstrator blinked, we could see a virtual version of that blink. When the wearer is looking at an app, a blue light appears, seemingly to indicate that their attention is elsewhere.

When they enter a fully virtual environment, the screen turns into an opaque shimmer. If you start talking to them while they're watching a movie, their virtual eyes appear in front of you. When they take a spatial photo, you'll see the screen flash like a shutter.

That's all very well and good, but it's a very strange feeling to be wearing a headset and have no idea what's showing on the display on its front. What's even weirder is seeing someone's virtual eyes pop up on the screen while you're looking at the real person. Social cues like this will take a long time to sort out. I spent half an hour skimming through scenes like a kid staring at an alien planet (I never left the couch).

But by the end of my demo, I started to feel the weight of the headset bringing me back to the real world. I kept furrowing my brow and felt a slight headache. When I took off the headset, that tension went away. But as I walked back to Manhattan, I kept replaying the demo in my mind. I knew what I had just seen; I was still trying to figure out where it would fit in the real world.
